The 4 Machine Learning Models Imperative for Business Transformation

Deploying machine learning models to predict an outcome across a business is no easy feat. That’s particularly true given that data science is an industry in which hype and promise are prevalent and machine learning — although a massive competitive differentiator if harnessed the right way — is still elusive to most brands. There are a multitude of potential hurdles and gaps standing in the way of actuating models into production, including skills gaps (both internally and with vendors or providers) and the possibility that your data or the models themselves don’t possess enough integrity and viability to produce meaningful results.

Initiatives to enact and stand up machine learning-based predictive models to make products and services smarter, faster, cheaper, and more personalized will dominate business activity in the foreseeable future. Applications to transform business are aplenty, but it is highly debatable how many of these predictive models have actually been successfully deployed or how many have been effective and are serving their intended purpose of cutting costs, increasing revenue or profit or enabling better and more sublime customer and employee experiences.

Hi, we are Buckley & Cassidy, and this is a subject that has needed some clarity for quite some time. We’re glad that you’ve found this post, and especially glad that we finally found the time to get it out into your digital hands. To forewarn you, there’s a lot to unpack about machine learning models, and in this post we’re going to do just that…unpack the various elements of setting up and deploying a machine learning model that effectively predicts what’s needed to drive successful business outcomes. Once that foundation is laid, we’ll outline the top four predictive machine learning models every business should operationalize.

Now, even though we advise against skipping ahead as you’ll miss getting skilled up in this most cutting-edge topic, IF you want to jump ahead and dig right into the models themselves, simply click the anchor links below:

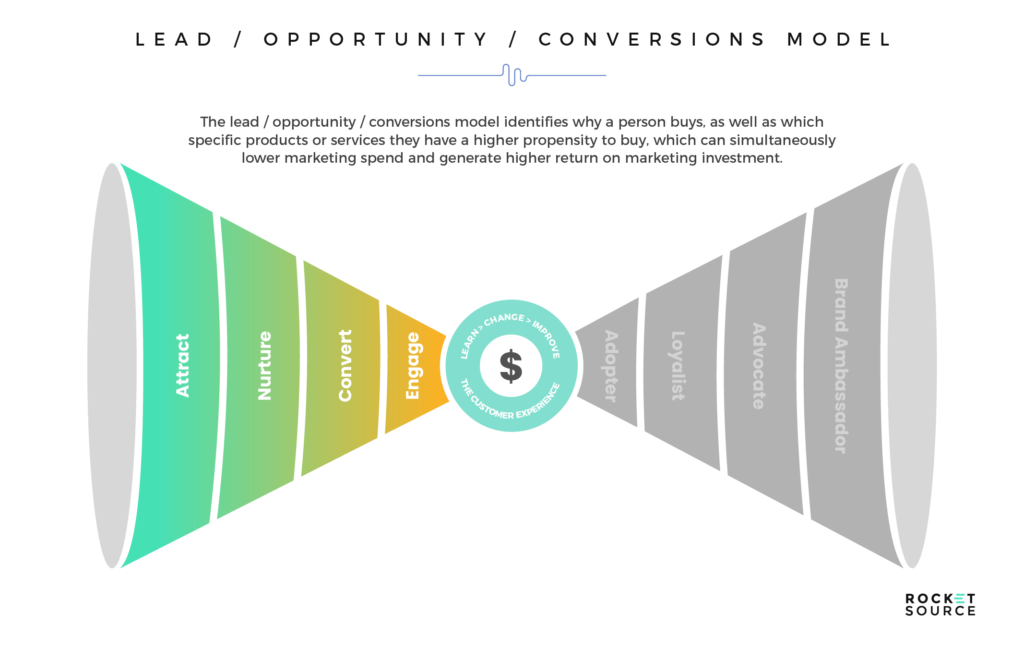

- Lead/Opportunity/Conversions Model

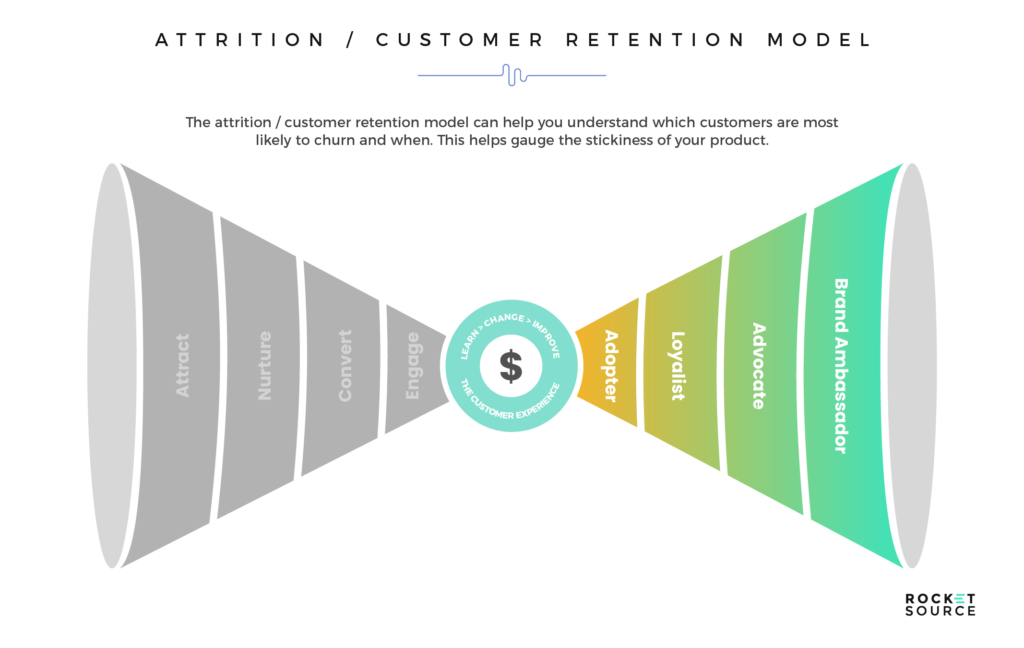

- Attrition/Customer Retention Model

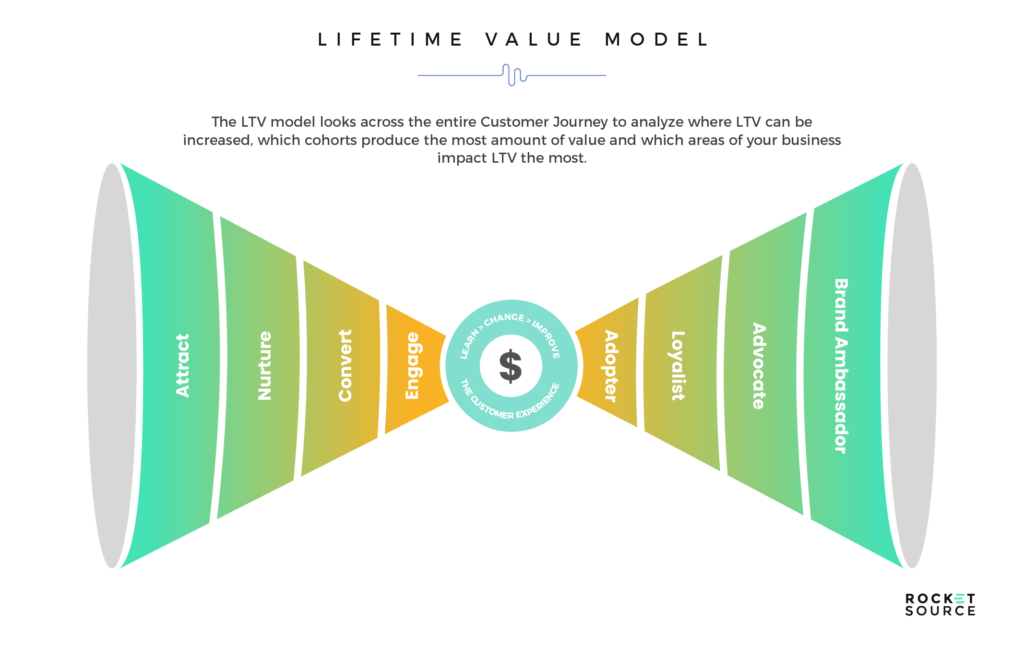

- Lifetime Value Model

- Employee Retention Model

In our years of consulting and growing new divisions and companies, there has never been a better time to look at, evaluate and invest in these four machine learning models to help steer new, modern campaigns and initiatives as well as drive insulated innovation and true transformation. The result of having these models in production has led to significant growth figures, and while we won’t and can’t share those figures and details explicitly, we can certainly show you what it may look like for your organization. On top of that, we’ve been so wowed by the results these models have generated that we’re also using them internally as we put the finishing touches on a new product in our Labs division. If, by the end of this post, we haven’t wowed you like these models have wowed us, please reach out. We’d love to benefit from your experience by learning what we should have or could have done differently.

For now, let’s dig in.

What Do Effective Machine Learning Models Look Like?

Before we get into the machine learning models you should be using, it’s important to note the difference between building a model in test, deploying the outputs of a model and assimilating a model into actual processes and business products and services for humans to use. Yes, it’s a complex job, but there’s an even greater, unseen complexity when putting machine learning into practice. As evidence of what we mean, take a look at one of Cassidy’s favorite quotes:

“Kaggle is to real-life machine learning as chess is to war. Intellectually challenging and great mental exercise, but you don’t know, man! You weren’t there!” – Lukas Vermeer

Kaggle is an online community that brings data scientists together to learn from and support each other while tackling major challenges. Even though courses and conversations might facilitate an atmosphere in which you’re forced to do mental gymnastics, they lack one core attribute — the actual emotional, human response consumers have to your brand with everything they experience at each touchpoint.

Moreover, there isn’t a one-size-fits-all approach to machine learning. With so much data at our disposal, it’s often a struggle to identify the focal points of each model to ensure that effective changes are made regarding the business’s goals and strategies. For example, how do you define a canceled customer? Is it someone who you’ve lost contact with after three weeks? Three months? What data points do you incorporate into the model to make strategic business decisions? Basic demographics or descriptive data such as gender, subscription type and residence aren’t going to do much when it comes to making strategic business decisions.
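To make the "canceled customer" question concrete, here is a minimal sketch of how the choice of inactivity window changes who gets labeled as churned. The customer IDs, dates and the `label_churned` helper are hypothetical and exist only for illustration; your own definition will depend on your business.

```python
from datetime import date, timedelta


def label_churned(last_activity, as_of, inactivity_days):
    """Return True when the customer has been inactive longer than the window."""
    return (as_of - last_activity) > timedelta(days=inactivity_days)


# Hypothetical customers and the date of their last recorded activity.
last_seen = {
    "cust_a": date(2024, 5, 25),
    "cust_b": date(2024, 4, 1),
    "cust_c": date(2024, 1, 15),
}
as_of = date(2024, 6, 1)

# Compare a three-week window against a three-month window.
for window in (21, 90):
    churned = sorted(
        cust for cust, seen in last_seen.items()
        if label_churned(seen, as_of, window)
    )
    print(f"{window}-day window -> churned: {churned}")
```

With the three-week window, two of the three customers count as canceled; with the three-month window, only one does. The model's target variable changes before a single algorithm is chosen.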

Said another way:

How can you build models that have an impact on the most vital areas of your brand, so that they bring value to your business?

Through the rise of technology, we’ve seen many businesses center their hopes and dreams on leveraging artificial intelligence (AI) as the driving force behind major organizational changes to encourage growth and stand the company up in highly competitive markets. Unfortunately, we’ve also seen a majority of those aspirations falter without the proper frameworks, alignment, rigor and forethought to back them up. Instead of creating “game changer” initiatives, hasty companies often end up with no more than expensive science experiments that have dismal success rates and low “attributable ROI.”

Having the proper frameworks in place ensures that you organically uncover where to start assimilating data and insights from the model into the appropriate processes and organizational materials. In doing so, you’re able to drive growth via digital transformation and economies of scale, bringing to light the metrics and targets around which models should be built. Before any of this can happen, you must lay the foundation for the production of your machine learning models.

Laying the Groundwork for the Production of Your Machine Learning Models

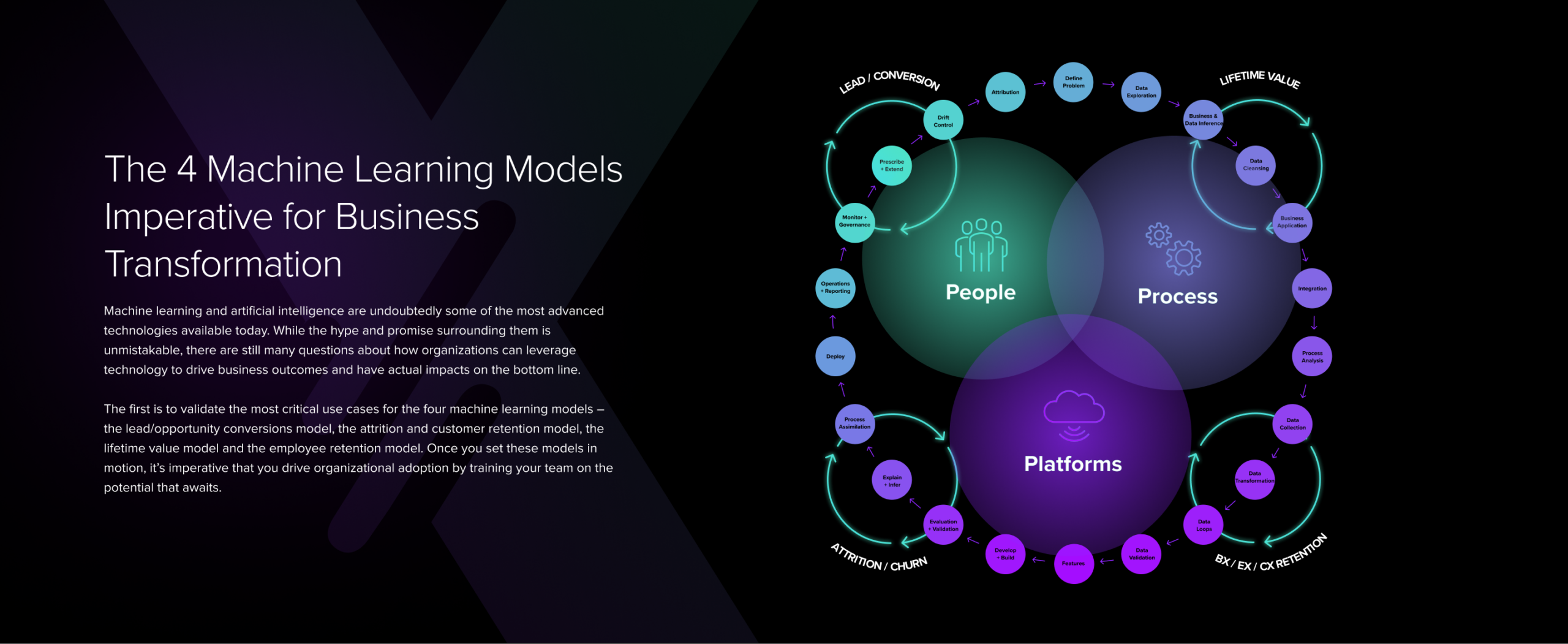

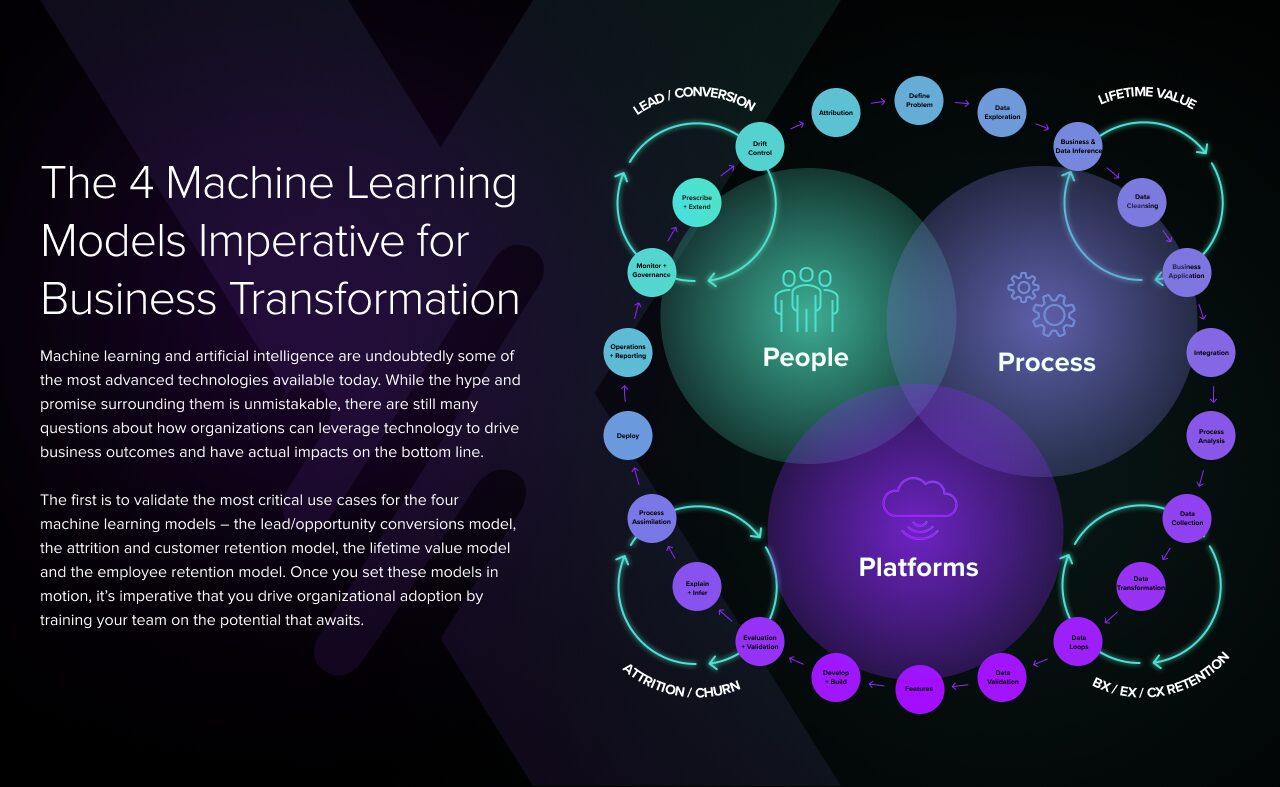

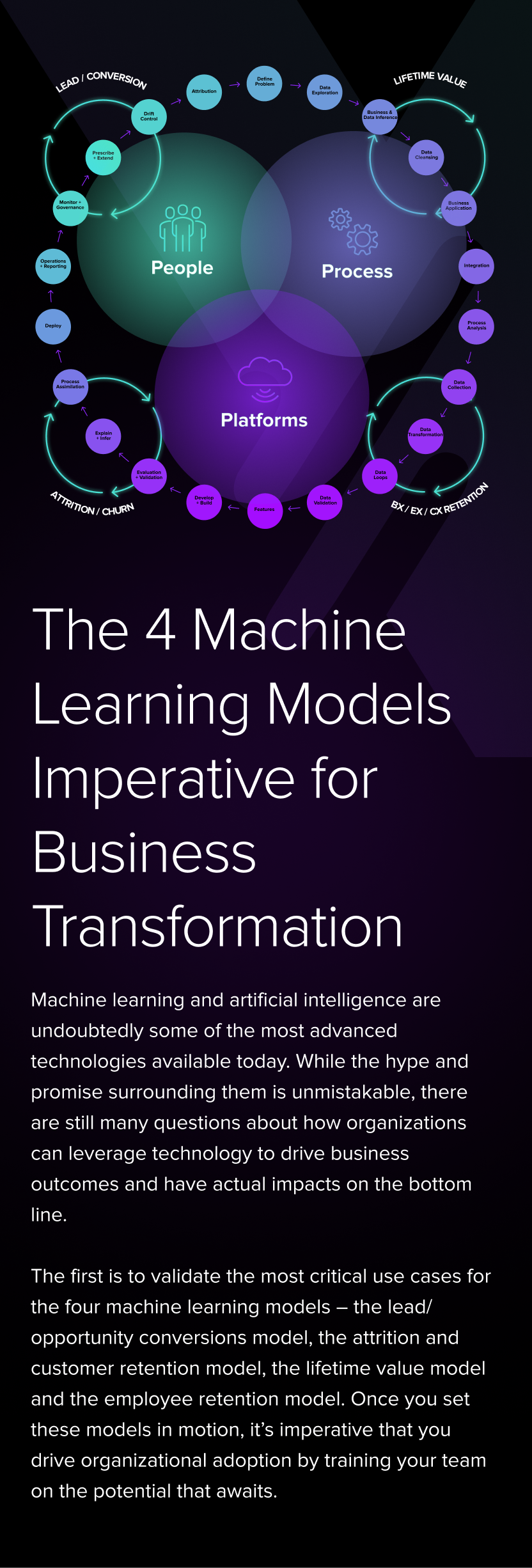

There’s a lot of hype out there about the possibilities of modern technology. After all, the more digital assets and data we have at our fingertips, the more we’re able to ratchet up our insights and move faster and more strategically. Still, despite these digital economies of scale, there’s no silver bullet for leveraging what’s out there. Although technology is getting increasingly sophisticated, it’s often overwhelming. There’s a surplus of assets that can be leveraged to formulate a strategy and roadmap centered around advanced analytics. Where do you begin? We believe it starts with the 3 Ps of your organization — people, processes and platforms.

The 3 Ps have a direct impact on the alignment and interconnectivity of combinatory systems, which include your ability to describe, interpret, explain and control the wealth of information you have at your disposal. Deploying technology without skilled people, sophisticated platforms or effective processes will more often than not lead to models being built but then failing to deliver actionable or valuable insights.

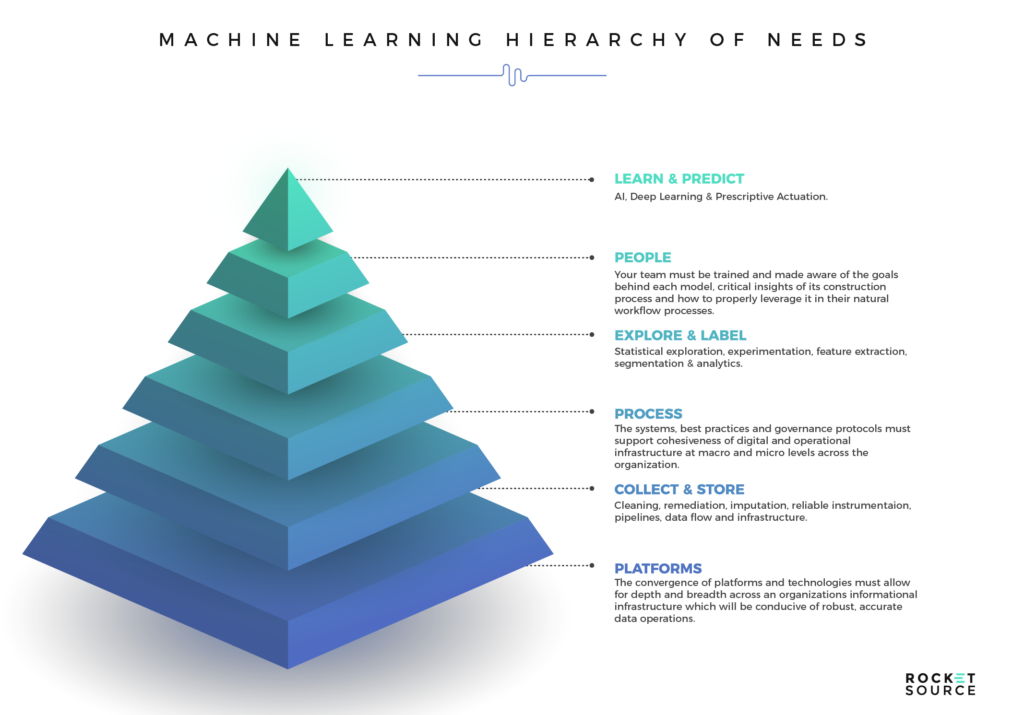

There’s a strict hierarchy of needs for driving machine learning to succeed. At the ground level of that hierarchy are the technology needed to put a new model in motion and the collection methodologies for gathering the necessary data. Too often, this is where organizations start and end, assuming that platforms will deliver actionable insights needed to transform. However, platforms are just the beginning. In order to adapt to today’s fast-paced market, you must also have the proper technology in place to collect and store the necessary data, and the proper processes for delivering clean data to your models. Once your model has that data, it must clean, aggregate, segment and analyze what’s available to deliver a predictive result.

The top two sections of this needs hierarchy are perhaps the most important. In order to leverage sophisticated technology, it’s imperative that you have people who are skilled enough to take the output generated by your machine learning models and translate it into something valuable to the business. Without that progression and skillset on board, it’ll be dang near impossible to fulfill the top tier of the hierarchy — to learn from the data and predict future market behaviors. Your people infuse human insight into the data and make decisions that will tug on the emotional and logical triggers of your target audience.

When done right, this hierarchy serves any business well, regardless of size or industry. We know this with certainty because we have seen it help dozens of organizations drive significant growth by transforming the way they work and helping them insulate their business via advanced digital transformation. Cassidy has years of industry knowledge and more battle scars than most when it comes to building and deploying predictive analytics across the front lines of organizations and Buckley has advised and implemented on data-driven digital transformation and innovation with some of the fastest-growing and biggest brands on the planet. From leading numerous intelligent customer journey mapping exercises to helping organizations humanize advanced journey analytics generated by machine learning models, the common and most sought-after goal is to uncover insulating innovation opportunities and growth potential as well as reduce the costs of doing business with the modern customer of today.

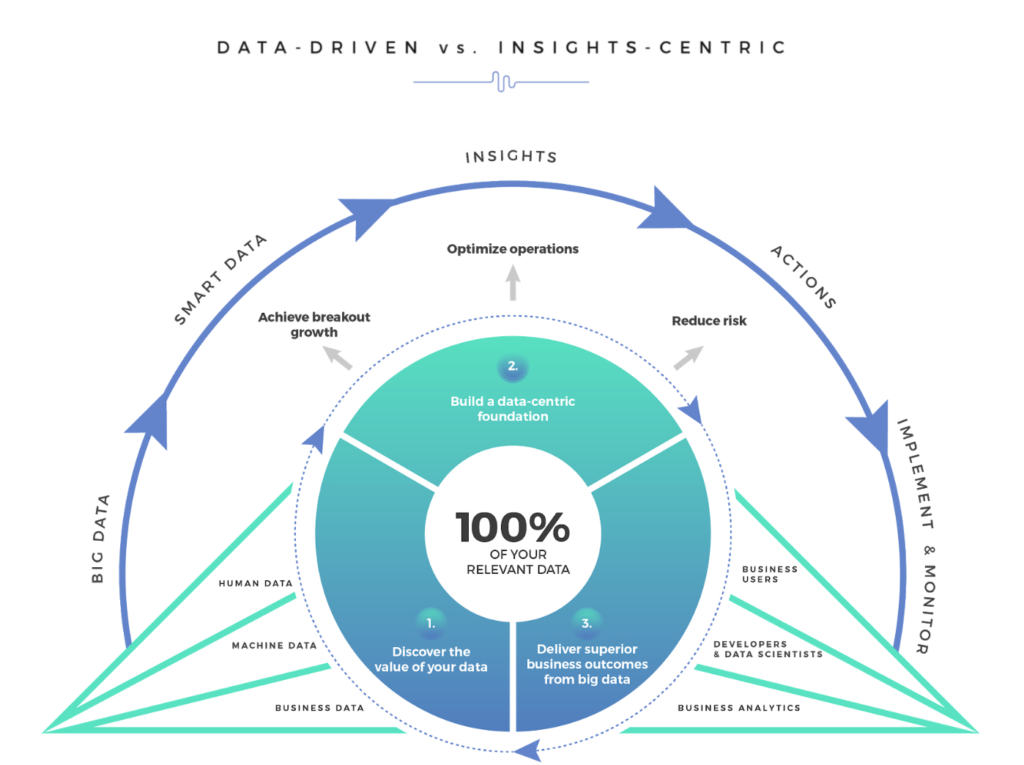

See the image below? We call this the Customer Insights Mapping (CIM) model.

Insights. That’s what every company is after, isn’t it?

This CIM Dashboard is the output of one of our core offerings — a customized (and potentially predictive) journey analytics dashboard which pinpoints the critical areas where companies are succeeding and failing across the entire customer experience. There isn’t a single company in the world that doesn’t want to uncover the precise moments in a customer’s journey where not only an implicit understanding of the customer’s journey is known but where predictive machine learning could add a dynamic competitive and insulating barrier against competition.

Not a single one.

We continue to recognize the need for machine learning models that drive business outcomes. But, without the proper frameworks in place to leverage human insight, you won’t have the people, platforms or processes necessary to learn and predict based on the output from your models. Repeated conversations with clients were the catalyst for this post — to show you how technology can be leveraged to deliver a beautiful end-to-end experience.

When it comes to digital transformation, you can’t rely on guesswork. Instead, you must have the right people and processes in place to take what’s available through the platform and turn it into something valuable for the business.

Using the Right Technology to Build Machine Learning Models

Nearly every business we sit down with is enthusiastic about the idea of pursuing machine learning and artificial intelligence, and is excited about the possibilities associated with them. However, to be ready for any kind of beneficial pursuit, you first need to know which of the model options will best suit your needs.

The technology-specific aspect of a machine learning or AI strategy should center around an organization’s ability to use what’s generated by the models. If a business isn’t equipped to process and benefit from this data, why build these models in the first place? It’s important to note that we are using the term “model” to refer to the spectrum of models out there today. For example, a model could be a robust machine learning system, or it could simply be a rules-based model built entirely in Structured Query Language (SQL). Let’s dig in a little further, shall we?

Rules-Based (SQL) vs. Machine Learning

SQL is a basic language used to communicate with databases. In the past, when there was a very limited amount of data available, SQL thrived. As we moved into an era where Big Data is, well…BIG, managing the wealth of incoming data has taken on a life of its own. What worked yesterday will no longer work today. Data management now requires a more sophisticated approach — more sophisticated than what a simple rules-based approach can offer.

Before we jump too far ahead, if you’re not familiar with SQL, here’s an excellent video to provide more context to this discussion:

In the right situation, building advanced machine learning is immensely valuable for adapting to the increased amount of data at our disposal, but in many instances we can benefit from the simple rules-based approach offered by SQL. Let’s look closer at some of the benefits and limitations of SQL when it comes to understanding historical trends, behaviors, patterns and, ultimately, relevant insights.

Benefits of Using SQL

Organizations find a fair amount of value in a robust set of rules-based models for a number of reasons. First and foremost, in an SQL environment queries and their results are easily explainable and decipherable. You don’t need an advanced skill set to understand what’s being conveyed by the model, and disseminating the knowledge gleaned from SQL is simple and straightforward.

It’s also much easier to gain adoption of usage of SQL reporting. That’s because, in many instances, those using the data were likely involved in the performance in some way, shape or form. They already possess a level of context and have some skin in the game, which creates understanding and pre-conception. As a result, those people viewing the data are more open to adopting the insights gleaned and to optimizing processes based on what they’ve learned.

Limitations of Using SQL Exclusively

While there are certainly benefits to SQL, rules-based reporting definitely comes with its limitations. The old adage “garbage in, garbage out” couldn’t be more true in this realm. Labels, dimensions and overall integrity are completely dependent on the state of the data itself. If the data is dirty, whether from mishandling due to a lack of data governance or from poor collection practices, the end result won’t be accurate and could lead organizations down the wrong path.

Further, rules-based models are highly manual and are fixed. As data evolves and time passes, the interrelationships, correlations, etc. throughout the data will change. Still, regardless of this natural ebb and flow, the ability to build dynamic rules that evolve with the data is quite difficult.

Rules-based reporting in the form of SQL is, in large part, rear-facing or historical reporting. And, if we’re honest, even the most “real-time” solutions are historical. For example, a properly configured query could tell you the average number of page views your site had the Sunday evening after you launched a new ad, but it might not be able to help you predict outcomes for future ad launches.
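As a rough illustration of that kind of rear-facing query, the sketch below runs SQL against an in-memory SQLite database from Python. The `page_views` table, its columns and the sample rows are all made up for this example; a real reporting schema will look different.

```python
import sqlite3

# In-memory database with a hypothetical page_views table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE page_views (session_id TEXT, viewed_at TEXT, views INTEGER)"
)
rows = [
    ("s1", "2024-06-02 18:05:00", 4),
    ("s2", "2024-06-02 19:30:00", 6),
    ("s3", "2024-06-02 21:10:00", 2),
    ("s4", "2024-06-03 09:00:00", 9),  # Monday morning, outside the window
]
conn.executemany("INSERT INTO page_views VALUES (?, ?, ?)", rows)

# The "rule": average page views per session on Sunday evening after launch.
query = """
SELECT AVG(views)
FROM page_views
WHERE viewed_at >= '2024-06-02 18:00:00'
  AND viewed_at <  '2024-06-03 00:00:00'
"""
avg_views = conn.execute(query).fetchone()[0]
print(avg_views)
```

The query answers exactly what it was written to answer about the past. Nothing in it adapts or extrapolates to the next ad launch.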

Machine learning answers the challenge of future-facing predictions by using an algorithm to evolve along with the data over time without depending on a predefined set of rules. That’s because predefined rules are just the beginning of how algorithms deliver deeper insights to organizations today. Let’s keep digging.

Statistics vs. Machine Learning

As an organization’s information infrastructure matures, the most appropriate next step is to begin adding advanced analytics. We use the specific term advanced analytics with purpose in this context for two reasons:

- It assumes migration from historical analytics into current and future based analytics

- It encompasses statistical analysis as well as machine learning

That last point is important. Statistical analysis and machine learning are not one and the same. In fact, depending on who you ask, you may hear some emphatic opinions about the differences between the two approaches. We won’t try to wrap up this ongoing debate in just a few short paragraphs (yeah, even we’re not that brave), but we can try to summarize it for the sake of our audience’s desire to derive more meaning from their analytics and models. You likely do not care whether an optimized set of data-driven processes and protocols are explicitly defined as statistical modeling vs. machine learning or predictive modeling, but you do care how both approaches impact you, so we’ll simplify the differences to help you know what you need to in order to create meaningful analytics and models around your business objectives.

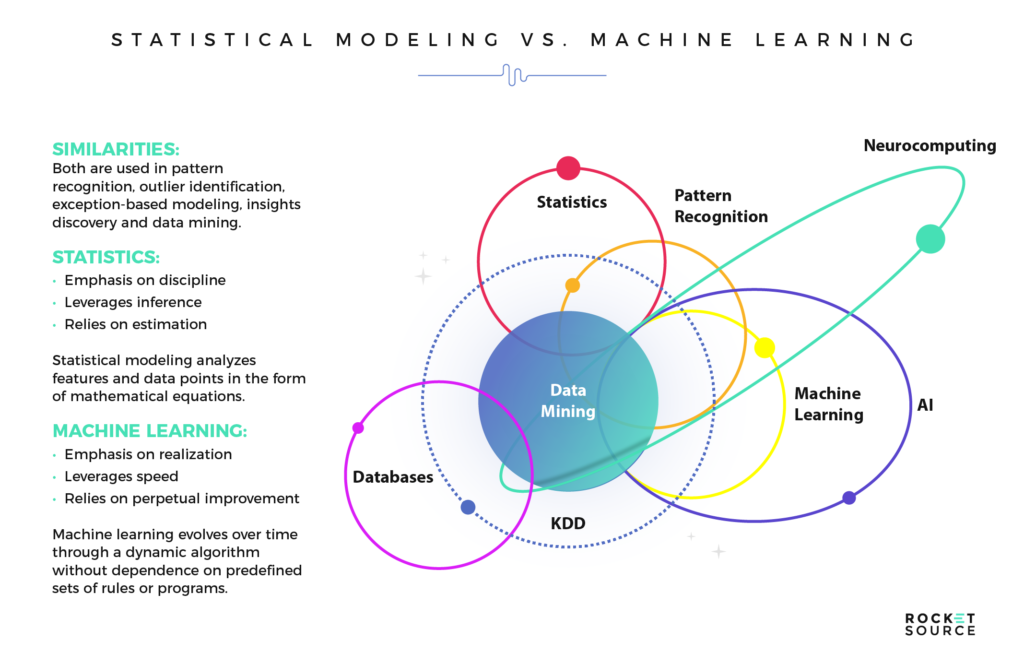

In their simplest forms, both machine learning and statistical analysis serve the same purpose — to gain a deeper informed understanding of a given data set. Both are used in pattern recognition, outlier identification, exception-based modeling, insights discovery and data mining, and are often leveraged to solve the same, or similar, problems. Still, they have their differences.

Statistics emphasizes discipline and leverages inference and estimation. It is the development and translation of interdependence between features and points in the data in the form of mind-bending mathematical equations. Machine learning, on the other hand, emphasizes realization, speed and what we would call perpetual improvement. It is dynamic in that an algorithm evolves along with the data over time, without dependence on a predefined set of rules that are in the form of programs.

The irony (or lack thereof) is that, despite the similarities between machine learning and statistical modeling, each approach has its own independent genealogy. Machine learning is a member of the computer science and artificial intelligence field. This field’s focus is building systems that can learn from data in a dynamic way, instead of relying on rules and explicit programming. Statistical modeling, by contrast, is a member of the mathematics field. Over time, as both the volume of data available for modeling and the computational ability to model data have grown, data scientists have been able to build technologies (algorithms) that can learn by analyzing data. Statistical modeling techniques have existed for hundreds of years (before computers were even thought of), while machine learning is relatively new to the scene.

A good example of the differences between machine learning and statistical modeling outcomes when solving the same problem can be seen on the graph below from McKinsey & Company’s article outlining machine learning for executives. With one look, the differences between statistical analysis and machine learning models in reporting on the ever-so-common telecom customer churn example are easy to spot.

A statistical approach in the form of a classic regression analysis presents itself as the simple green line across the center of the chart. The machine learning model’s findings, on the other hand, are visualized using gorgeous colors and bubbles to emphasize segregation and parameters for which customers have a higher or lower probability of churning. Machine learning goes well beyond the simple semi-linear boundary, allowing for a deeper and more nuanced delineation of high- and low-probability areas throughout the data as it pertains to the two features being visualized. We’re willing to bet that telecom industry employees can gather more insight from the machine learning model’s output than from a simple regression line because it allows them to better identify and predict the customers with the highest risk of churning.
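The contrast can be sketched in a few lines of scikit-learn. This is not the telecom data from the chart; it is a synthetic two-feature dataset (`make_circles`) chosen because its classes cannot be separated by a straight line. Logistic regression stands in for the classic regression boundary and gradient boosting stands in for the more flexible model. Both choices are our assumptions for the sake of illustration.

```python
from sklearn.datasets import make_circles
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for two customer features with a non-linear class boundary.
X, y = make_circles(n_samples=1000, noise=0.1, factor=0.4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# A single linear boundary versus an ensemble of decision trees.
linear_model = LogisticRegression().fit(X_train, y_train)
boosted_model = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

linear_acc = linear_model.score(X_test, y_test)
boosted_acc = boosted_model.score(X_test, y_test)
print(f"linear boundary accuracy:  {linear_acc:.2f}")
print(f"boosted trees accuracy:    {boosted_acc:.2f}")
```

On data shaped like this, the linear boundary does little better than a coin flip while the tree ensemble recovers the ring structure. On data that really is close to linear, the gap would shrink or disappear.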

Although machine learning models might seem like the best option because of the detail they provide, that might not always be the case. The lines between statistical modeling and machine learning continue to blur around usage and applicability in the business. In deciding which is right for you, it’s important to keep in mind whether you’d benefit more from the straightforward answers of statistical analysis or from the more nuanced insights of machine learning predictions. The reality is, there are complementary uses for both statistical modeling and machine learning and each should be at an organization’s disposal when needed.

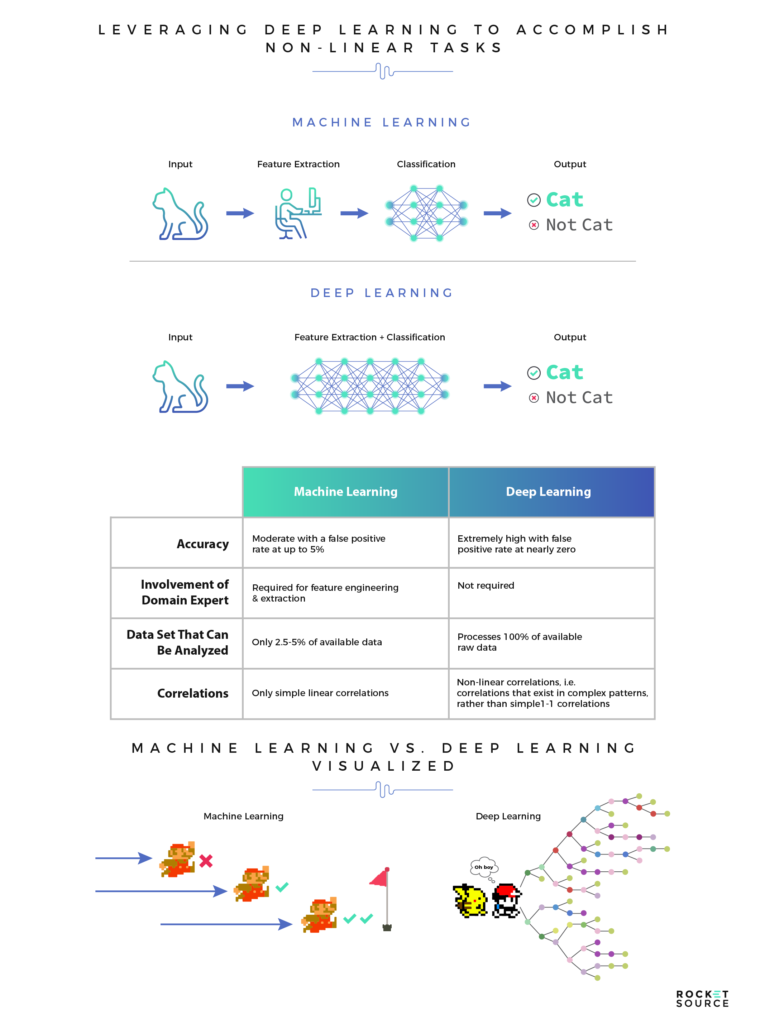

Deep Learning vs. Machine Learning Models

One topic we find very interesting and will freely admit consumes much of our free time is machine learning. We devote considerable time to researching meaningful business-level topics related to AI, deep learning and machine learning. Deep learning, in particular, is fascinating, but it is often confused with machine learning. Specifically, confusion arises when considering the potential application of either in terms of understanding the historical and likely future behaviors of humans and the correlation of those behaviors with business outcomes.

If you’re unsure of the core differences between the two, this brief video by MATLAB gives an excellent quick and digestible overview.

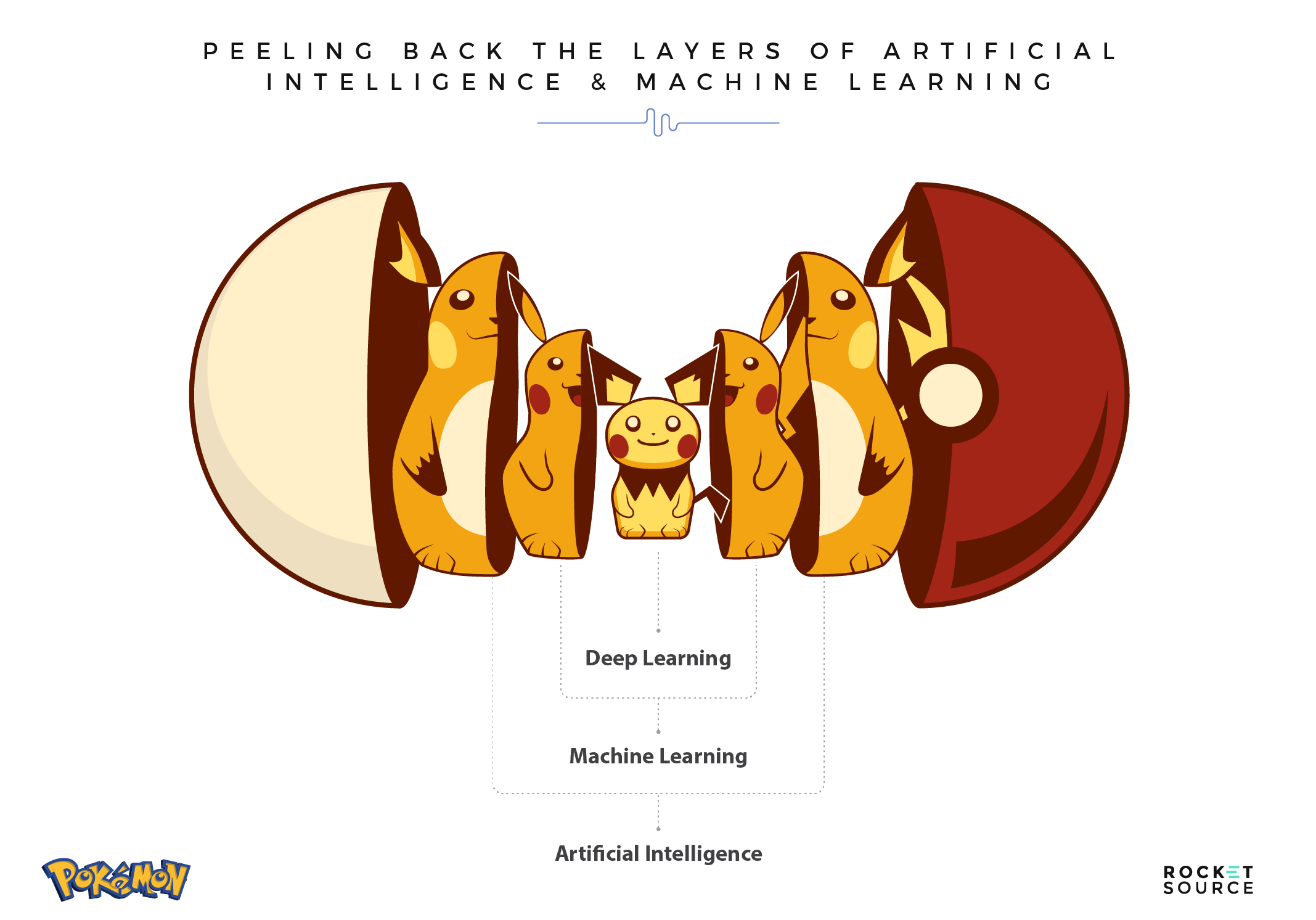

Machine learning requires a person to manually select certain features to learn from. Those features are then used to classify new objects, patterns or behaviors. Deep learning also occurs over time and is based on a feature set, but those features are identified by the model itself rather than being inputted by a person. In its simplest form, deep learning is a subset of machine learning. Machine learning is a subset of AI. Each is important and serves a purpose in the business world.

Artificial Intelligence

AI has been around since the 1950s, and is typically defined as the capability of any modeling approach to imitate human behavior or to solve problems and perform tasks like a human.

Machine Learning

Machine learning rose to prominence in the 1980s, although the term itself dates back to the late 1950s. While still old by modern terms, it is a more recent development than AI as a whole. Machine learning applies AI techniques that allow computers to learn patterns and behaviors without explicit programming. This is a big deal. Just like the value of a brand new car is in perpetual change as soon as it’s driven off the lot, so is data. Because of this perpetual change, pre-defined rules such as if/then statements can become less effective or increasingly obsolete as time passes if they are not tended to. The dynamic state of machine learning means that it remains in a perpetual state of optimization. In other words, machine learning algorithms are continually working toward an optimal likelihood that predictions are correct as data evolves. This is typically framed as minimizing a loss, or error, function. As new and fresher data is added to the model, the optimization is dynamic.
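To give a feel for what a loss or error function measures, here is a small sketch of log loss (binary cross-entropy), one common choice for classification models. The outcomes and predicted probabilities are invented for illustration; training a model amounts to adjusting it so that this number goes down.

```python
import math


def log_loss(y_true, y_prob):
    """Average binary cross-entropy between true labels and predicted probabilities."""
    eps = 1e-15  # keep probabilities away from exactly 0 or 1
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Hypothetical outcomes (1 = churned) and two sets of predictions.
actual = [1, 0, 1, 0]
confident_right = [0.9, 0.1, 0.8, 0.2]
uncertain = [0.5, 0.5, 0.5, 0.5]

print(log_loss(actual, confident_right))
print(log_loss(actual, uncertain))
```

Predictions that are confident and correct produce a lower loss than predictions that sit on the fence, which is the signal an algorithm uses to keep improving as new data arrives.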

Deep Learning

Deep learning is a subset of machine learning and an even newer and more sophisticated approach, originating in the first decade of the 21st century. Deep learning allows for a comprehensive analytical measure of intelligence for machines rather than solving for a single set of problems. The models rely on features to influence their output, leveraging deep neural networks (layers) of algorithms to solve a problem in every possible way.

To best illustrate this point we’re turning to an unlikely source — classic video games. Specifically, let’s look at Pokemon and Mario. A machine can beat Mario, but not Pokemon. Why is this? In Mario there is one goal — to make it to the end of the level without dying by moving from left to right and avoiding obstacles. The closer you get to the red flag at the end, the better you’re doing. A machine is typically able to quickly beat computer games like Mario because once it’s learned the details of each conflict, it can pass each level nearly every time.

The game of Pokemon is a little different. For those of you who are unfamiliar, Pokemon doesn’t have a singular end goal. Players don’t move in a linear fashion across the screen but rather are met with a series of options to choose from. What they choose depends on their personal end goal. Some players might choose to go to battle while others choose to move to a new area in the game. The further you progress, the more the options branch out into new scenarios, further increasing the options available. This game requires more of a deep learning model to assess every possible combination of extracted and classified features, drop them into a “black box” form and then deliver an output with the best possible routes for various goals. If this sounds like a daunting task, it is. Feature engineering is labor-intensive, can be complicated and requires a fair amount of time and expertise.

As you can imagine, predictions on problems like these can be significantly more accurate when deep learning approaches are leveraged instead of traditional machine learning. Deep learning is often leveraged when non-linear correlations and complex pattern recognition are needed to identify relevant information. In other words, when you’re not moving Mario from left to right on the screen, but are strategizing your next best move in a large open Pokemon map. Let’s be clear though, this doesn’t mean that deep learning is the one-size-fits-all solution. There are many use cases in which machine learning is much more appropriate than deep learning for a number of reasons. For example, machine learning can successfully be used (and is typically used) in moderately large data environments with less accuracy-restrictive problems.

To know what’s right for you, consider the context of your data and the output you hope to achieve. In contrast to machine learning, where there are typically a few thousand data points, specific problems that require deep learning tend to deal with several million data points. Further, the output of a deep learning model can be a score, number, element, image, audio, text, etc., whereas the output of a machine learning model will typically be a simple number like a classification score (probability) or an integer. Knowing what you have available and what your end goals are is crucial when deciding which approach makes the most sense for your organization. In other words, newer isn’t always better.

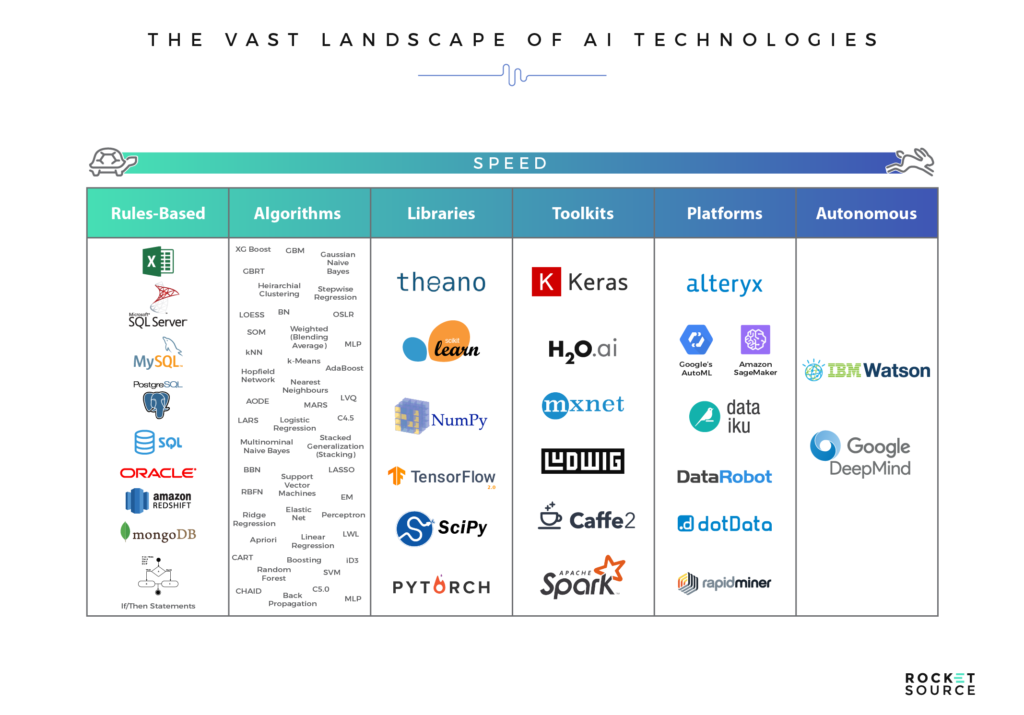

Algorithms, Libraries, Toolkits and Platforms… Oh My!

There are a multitude of technologies and frameworks on the market today that enable data scientists and machine learning engineers to build, deploy and maintain machine learning systems, pipelines and workflows. Just like any economic matter, supply and demand drives the improvement and progress of the product. As the use of machine learning in business increases, so does the number of frameworks and software that facilitate full-fledged machine learning workflows.

Because of this influx of new tools, the days of coding up a given algorithm in R and writing API configuration logic from scratch to set up a machine learning workflow are gone. Unless you like to code in R for fun, there’s no longer a reason to do so. That shift isn’t obvious though.

Recently, we were on the phone with a long-time client who inquired about the best way to leverage her skill sets in R and Python to assimilate some basic algorithmic logic into a data virtualization environment. After discussing many different scenarios, it was clear that a set of automated, open-source libraries and toolkits was likely the ideal setup to achieve an impressive time-to-value. A machine learning environment would have been better because it could scale and ultimately become an automated platform. Even a seasoned engineering and operations team can be unaware of some of the machine learning solutions available to them today which, in large part, are open source.

Modern machine learning solutions allow for minimal latency and impressive accuracy on models due to the automated aspects of many of the libraries and toolkits available.

Unless there’s a definitive reason for tuning and optimizing in order to achieve alpha, you simply don’t have to go through this part of the “old-fashioned” machine learning workflow any longer. There are many options and technologies that will do this in an automated fashion. If your head spins as you look at the options available to you, you’re not alone. There’s a lot out there to choose from. Depending on the focus and maturity of a given machine learning workflow, algorithms with raw code, libraries with broader capabilities, toolkits that offer various levels of automation or full-fledged automated platforms each have their potential place in an organization’s AI stack. To help you understand just how much is available to you, let’s summarize the landscape of options independently and as a whole.

You can see just how many layers there are for you to sift through and choose from. Deciding which option is right for your specific business goals, maturity and infrastructure can be difficult. Let’s break it down.

Algorithms in Manual Workflows

Algorithm selection is a critical component in any primarily manual workflow. The problem is that there’s no one-size-fits-all answer to which algorithm will work best across the board. There are several steps that are optional on a case-by-case basis. Testing several different algorithms in order to identify which one provides the best accuracy for a given set of data is critical. Still, in a manual workflow, relying on people to make the decision about which algorithm to use can be a lengthy process riddled with errors, even when those people are the best data scientists in the industry.

Automated and open-source machine learning cuts through the mess of configuration, getting you into production faster so you can focus on the application to the business rather than on trying to get something meaningful built. Most of the automated products nowadays will perform just as well as, if not better than, a manual model because of their ability to parse and compare multiple algorithms to find the best-performing one specific to the dataset of a given model.
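The core idea behind that comparison can be sketched with scikit-learn: score several candidate algorithms on the same data with cross-validation and keep the best one. The synthetic dataset and the three candidates below are our assumptions for illustration; automated products typically search far more options, including parameter settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

# Synthetic data standing in for a real business dataset.
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=0
)

# A small, hand-picked set of candidate algorithms.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "naive_bayes": GaussianNB(),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Mean 5-fold cross-validated accuracy for each candidate.
scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in candidates.items()
}
best_name = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: {score:.3f}")
print("best:", best_name)
```

Which candidate wins depends entirely on the dataset, which is the point: the selection is made by measurement rather than by habit.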

Libraries

Libraries are frameworks of procedures and functions written in a given language. A comprehensive arrangement of libraries allows data scientists and machine learning engineers to execute complex functions without having to originate or rewrite lines of code.

Machine learning code can be written in many languages, including R and Python. Both of these languages allow for extensive mathematical calculations, optimization, statistics and probabilities. However, one of Python’s powerful capabilities is its extensive set of libraries. Most of the commonplace libraries possess an API that Python can leverage in order to create automated integrations to critical systems and applications, making the language a great means for developing integrated workflows. Let’s look at two of Python’s more popular libraries.

Scikit-learn

Scikit-learn (SKL) is a commonplace library used by developers and organizations across the world. It features most of the classical supervised and unsupervised learning algorithms including linear regression, logistic regression, Gradient Boosting, Naive Bayes, PCA, K-Means and Support Vector Machines, to name a few.

One critical component, which is sometimes overlooked, is its diverse capability in data preprocessing and results analysis, explainability and reporting. Because traditional machine learning algorithms are SKL’s heritage and niche, this library stays maniacally targeted on these components. Although helpful, some data scientists assert this as a weakness because of the limited capabilities for interfacing with neural networks and deep learning-worthy initiatives.
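As a minimal sketch of those preprocessing and reporting capabilities, the example below chains imputation, scaling and a classifier in a scikit-learn `Pipeline`, then prints a classification report. The data is randomly generated with some values deliberately removed, and for brevity the report is computed on the training data, so treat the numbers as illustrative only.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic numeric features with a simple underlying rule for the label.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

# Knock out roughly 5% of the values to mimic dirty data.
X[rng.random(X.shape) < 0.05] = np.nan

# Preprocessing and model in one object, so the same steps run at predict time.
pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
    ("model", LogisticRegression()),
])
pipeline.fit(X, y)

# Precision, recall and F1 per class (on training data, for illustration only).
report = classification_report(y, pipeline.predict(X))
print(report)
```

Bundling the preprocessing with the model means missing values are handled the same way in production as they were in training.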

TensorFlow

TensorFlow (TF) is a machine learning- and deep learning-capable library built by Google. It leverages a multitude of traditional machine learning algorithms for both classification (binary and multi) and regression analysis.

Because of its expansive deep learning capabilities, the library is a bulky framework compared to SKL. To this end, the two libraries are often configured into workflows together in a cohesive set of systems and logic, which often starts in SKL and matures in an incremental fashion into TF.

In addition to SKL and TF, there are many libraries available to data scientists and machine learning engineers. But it won’t matter which library you use if you don’t first understand the problems you are attempting to solve, the tangential factors, and the various levels of maturity that exist across the organization. Only once you have that understanding can you select the library, or set of libraries, that will allow for an optimal and consumable workflow.

Toolkits

Toolkits are often built on a set of algorithms and libraries with various levels of “non-code” interfaces that allow the non-data scientist to leverage these powerful capabilities without having to draw up hardcore code. There are a few critical points specific to these toolkits.

- Automation: Most machine learning workflows require a heavy amount of cleaning, integrity accommodation, feature engineering, fitting, parameterization, etc., much of which these toolkits automate. This automation means that a non-technical analyst who may not understand best practices for imputation, encoding, cleansing, binning and feature engineering could likely still leverage an open-source toolkit to put a machine learning or deep learning model into production.

- Dynamic Algorithm Integration: Many seasoned developers integrate toolkits in their workflows today for the ability to dynamically leverage the best-fitting algorithm for a given dataset. The ability to bypass some of the more labor-intensive preparation and preprocessing makes sense for those looking to achieve democratization of their models and competitive time-to-value.

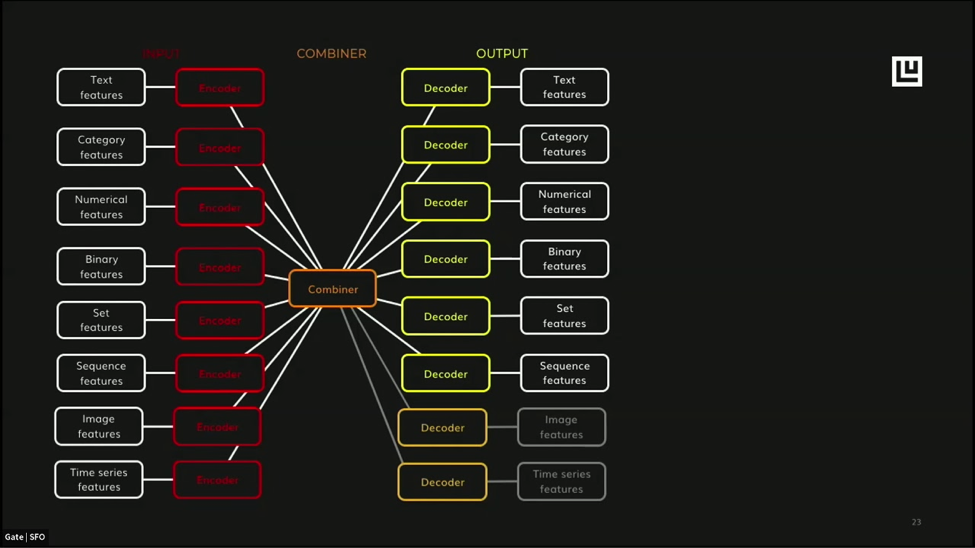

So, what does this look like in the real world? Take Uber’s Ludwig toolbox as an example.

Ludwig is built on top of TensorFlow, which means it has automated deep learning capabilities of features and classifiers so that models don’t have to be coded manually. Although automated, the model configuration is still done in various protocols, including a combination of command line and Python code. Therefore, we mere mortals (yes, that’s directed at you Piero Molino) still might assert that some code is required.

The Ludwig toolkit dropped in 2019 and we can vouch for its capabilities. In fact, we use it here at RocketSource on a regular basis for the reasons listed above. Ludwig is a prime example of an extensible, out-of-the-box toolkit. Whether you’re building a deployable machine learning or deep learning model, having automated accommodations around imputation, encoding, integrity and more in a competitive period of time with accuracy is critical when developing a workflow that can be configured and repeated over and over again in an organization.

Platforms

Organizations in which executives champion machine learning and teams have widely adopted it typically invest in machine learning platforms. These platforms enable a broader user base and leverage the provider’s expertise in scoping, configuration, deployment, adoption and maintenance of a given model or set of models.

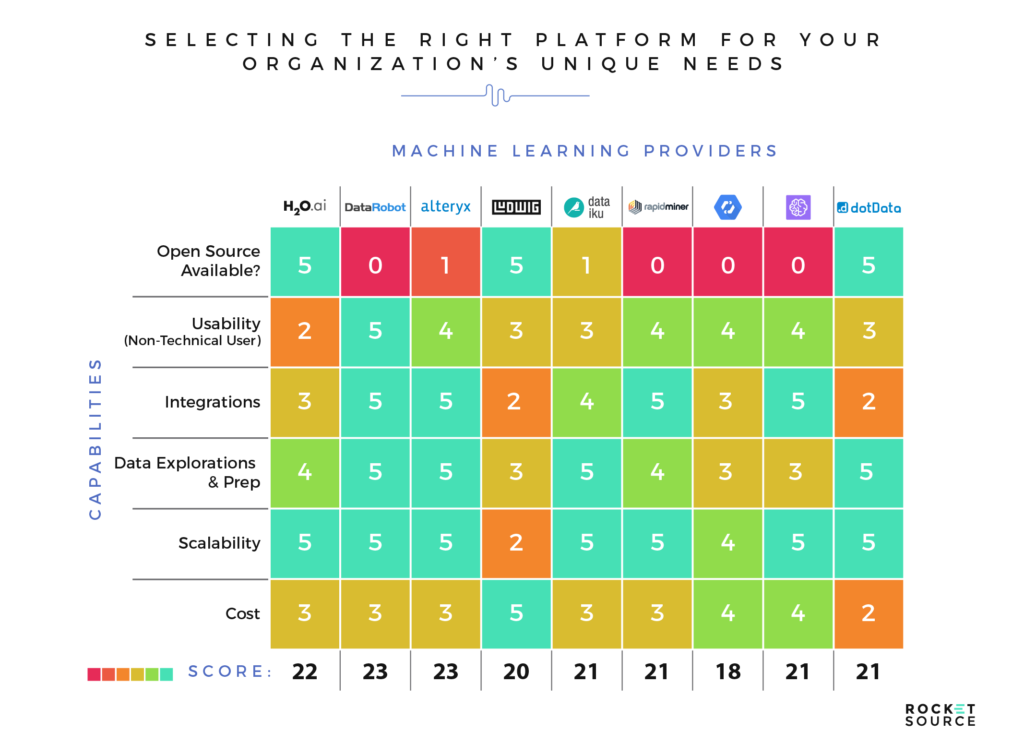

There are many comparable platforms on the market today. At their foundations, most platforms possess the ability to scope, configure, deploy, adopt and maintain, while many include additional integration, interface and inference capabilities. These granular capabilities are important to consider as you decide which platform is right for you. To help our clients at RocketSource, we leverage various protocols in running a Cost-Benefit Analysis similar to the one you see here:

In a Cost-Benefit Analysis, we outline a set of critical parameters and requirements as they relate to the business through a set of scoping and strategic efforts. The goal is to surface the most appropriate technologies for the business based on its current state and short-to-long-term goals. One of the best-known and widely adopted platforms with these capabilities is Alteryx. Although we’re strictly “platform agnostic” here at RocketSource, we’ll focus on Alteryx for the sole purpose of adequately articulating the typical functionalities we solicit to build out a Cost-Benefit Analysis around the many automated machine learning platforms available today.

Alteryx

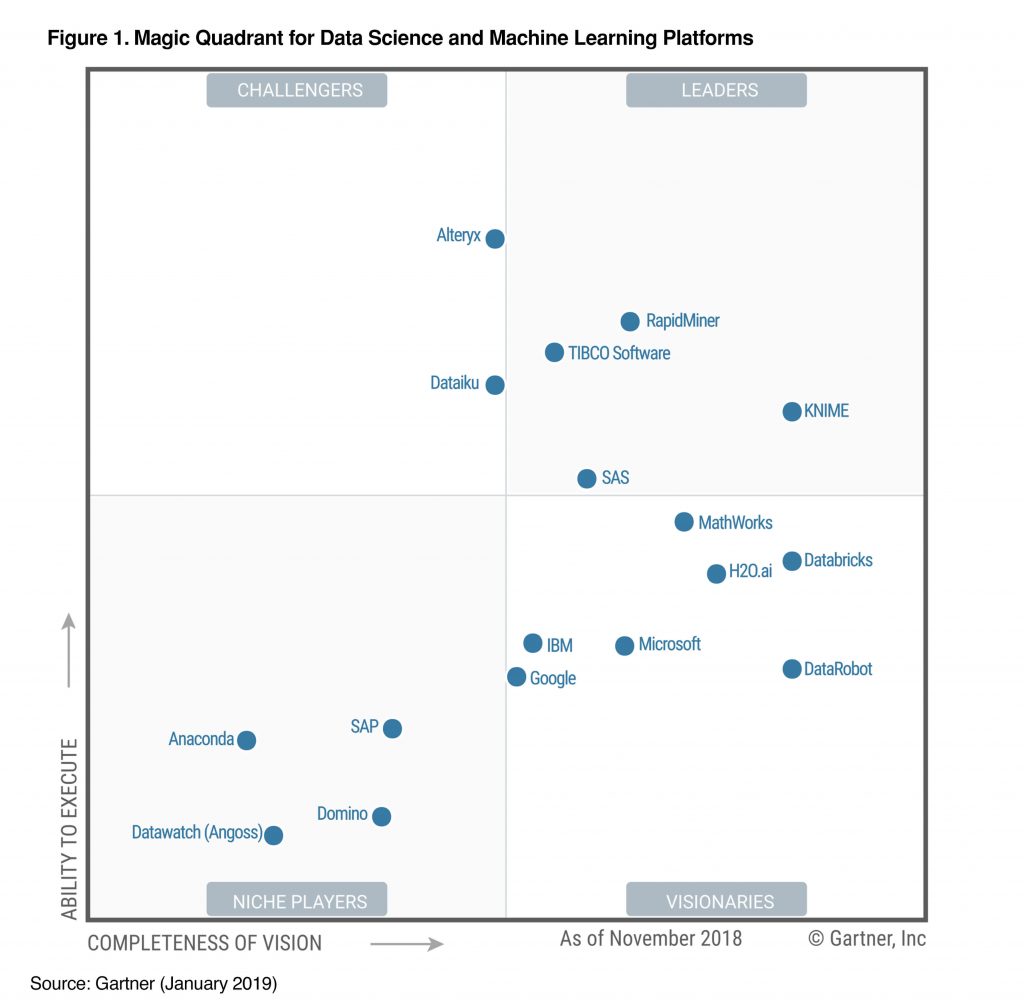

Alteryx continues to be a relevant competitor in the machine learning industry. In fact, in Gartner’s Magic Quadrant for Data Science and Machine Learning, Alteryx is one of only two ‘Challengers’ to the industry leaders.

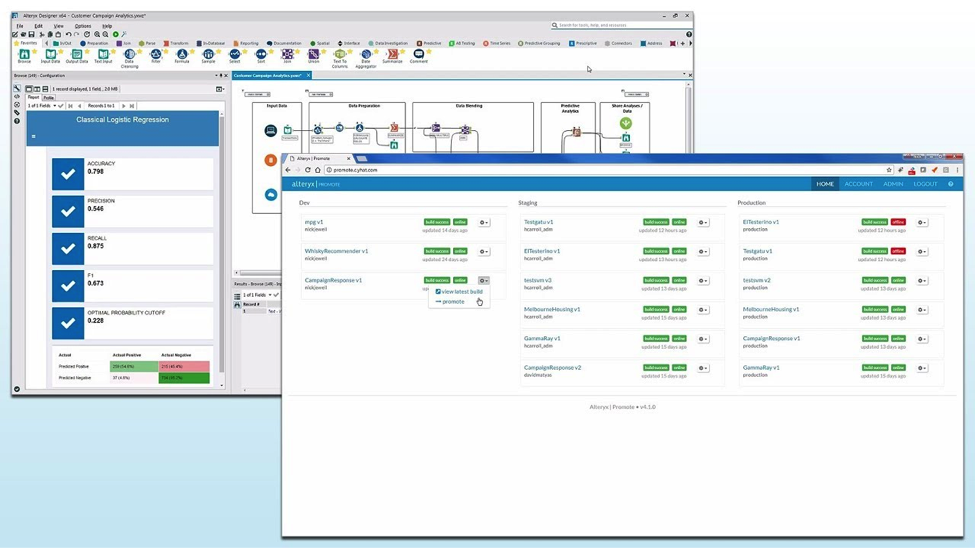

Alteryx hangs their hat on their ability to make data science available to citizen data scientists and the end-to-end users they’re servicing on a daily basis. Like most of the platforms available today, Alteryx boasts a graphical user interface (GUI) that allows for usage and consumption by the broader layman. It looks something like this:

Although the GUI is easy to digest, the workflow includes rich in-product capabilities including data integrations/querying, manipulation and preparation as well as feature selection and model execution. These capabilities are performed on a canvas that allows for commenting and markups for the articulation of critical workflow and configuration information. Further, the underlying code of a given model configuration is made available to be leveraged and executed in various languages including cURL, R, Python, Ruby, Java, PHP and even Salesforce. Model types and supporting algorithms for numerical predictions include Linear Regression, Spline, Gradient Boosting and Gamma. For classification models, Boosting, Logistic Regression (Binary), Decision Trees, Random Forest and Naïve Bayes algorithms are available. Once models have been inferred or surfaced based on fit of the aforementioned algorithms, predictions are rendered into the database or primary data repository of choice and outputs are disseminated accordingly.

Like many platforms, Alteryx integrates seamlessly with most of the major data repositories and technologies. We counted 89 total integrations on their website. These integrations allow for a level of scaling and reach that is quite impressive. While this all might sound technical in nature to a non-data scientist, the way the platform disseminates knowledge using these capabilities makes data digestible across the organization.

As mentioned before, Alteryx isn’t alone in offering capabilities like these. Although they’re an excellent competitor in the industry, other platforms may be better suited for an organization depending on its unique needs. The key to choosing the best platform for your organization is to identify which problems you’re trying to solve using machine learning models.

The Approach to Building Machine Learning Models

Hopefully by now, you’re starting to get the big picture of advanced analytics and the basic items needed to power an intelligent model — not just any model, but one that can be predictive in nature and can be a defining competitive differentiator.

Before you can develop an advanced analytics strategic roadmap — something we at RocketSource are well-known for building and operationalizing — you first need to identify the business opportunities that are most conducive to modeling. As you’ve seen, modeling can be approached in a multitude of ways, from rules-based with SQL to deep neural networking. Although it’s valuable to understand the different types of learning available so you can marry the problem you’re trying to solve with the most effective approach, it can also be detrimental. Trying to dig into each of the machine learning models to the nth degree can send you down the wrong path. Instead, starting with a clear business objective will help you create a model that’s appropriate, accurate and decipherable.

Leveraging the Right Learning Structure

In an ideal scenario, the outcomes of machine learning models are highly relevant and applicable, and resonate across the organization. In a not-so-ideal scenario, there’s a hazy gray area concerning the delineation of characteristics from one class to the next, which can limit the actionability and final value of the model. Knowing the difference, as well as knowing your objectives, will help you determine whether the model is worth leveraging, or if the results would get caught in that gray area, making the model less actionable and valuable.

It’s important to address something here. That possible gray area and lack of valuable deliverables have the potential to dampen the hype around machine learning models. Our goal isn’t to put out the flame on such a hot topic. Instead, we aim to educate while setting the proper expectations around each model.

Ultimately, if your models are not applicable, actionable and relevant, those efforts will surely affect your team’s morale and your organization’s incremental Internal Rate of Return (IRR).

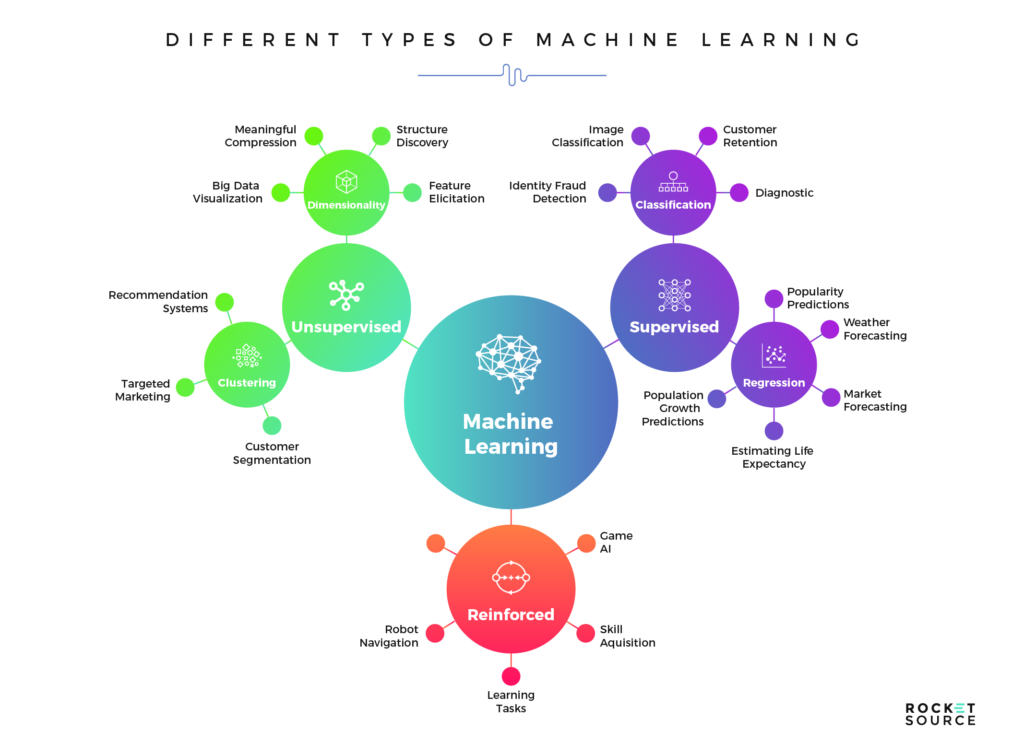

Before you can dig into a specific problem, it’s useful to understand what the process could look like, how to approach your learning, and what the possible outcome opportunities are. The first step in this process is to understand the various learning structures available to you — supervised, unsupervised and semi-supervised.

For a high-level understanding of the difference between these learning structures, think of machine learning as a student and the learning structure type as a school. Within the school, there are classrooms where teachers are present and actively training students using predetermined guidelines, as in supervised models. Unsupervised models are more like the playground. These models are let loose to run free and make up their own set of rules. Semi-supervised models are like the gymnasium, where there are certain rules in place but models are given freedom to explore and come up with new ideas. Let’s take a closer look at each learning structure to help you understand which model makes the most sense for you.

Supervised

Supervised learning takes an input variable (x) and maps it to an output variable (y), empowering you to make predictions for a particular data set. We call this supervised because it mimics the process of a teacher supervising students throughout the learning process. Once the algorithm is performing sufficiently, it stops learning.

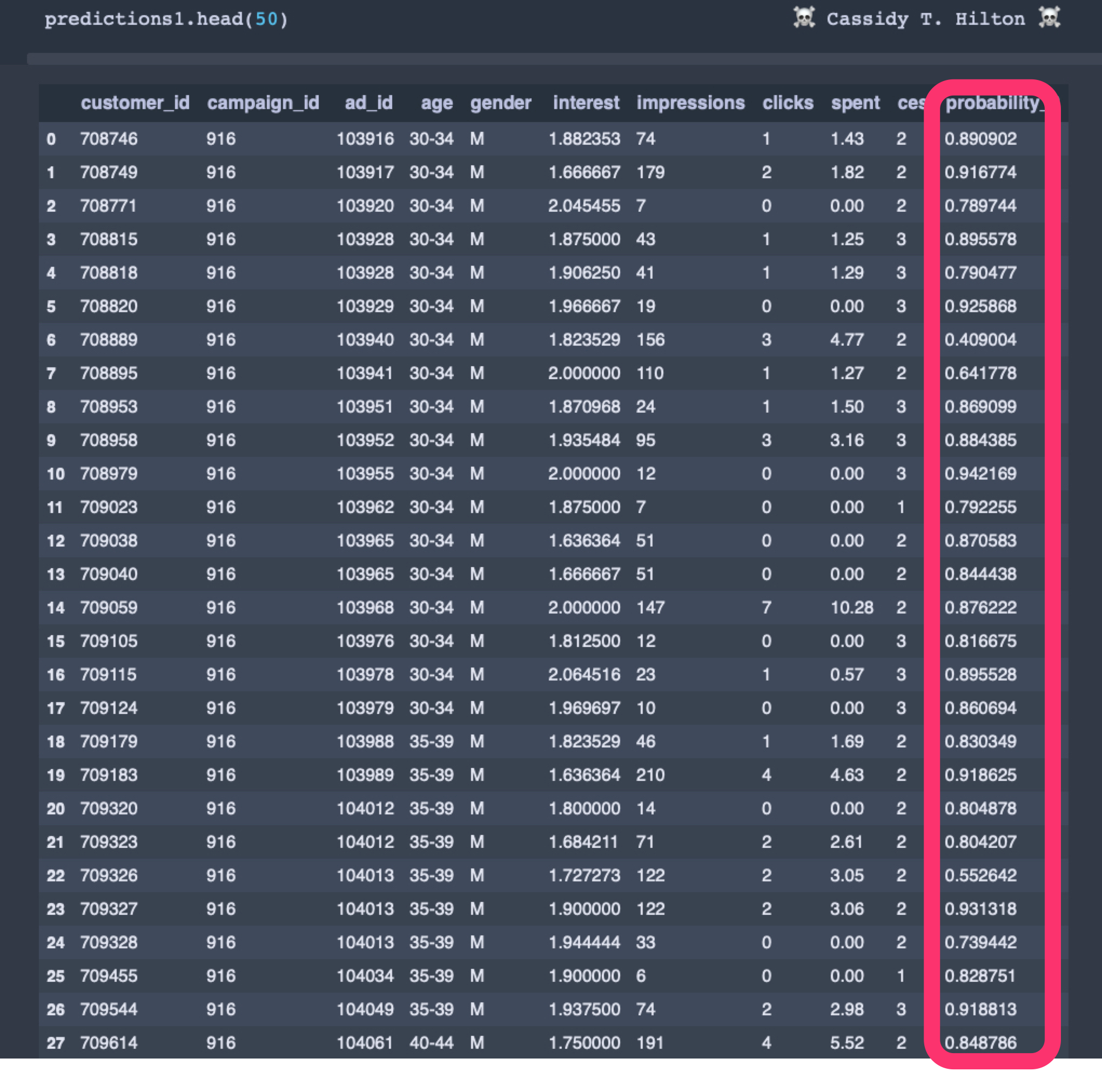

To illustrate how this works, let’s look at lead targeting, which looks for a simple yes or no answer regarding whether a lead is likely to convert. This answer would give us an actionable next step — to target the lead or not. To run this model, we would leverage a similar dataset with one record for every lead in our data and a classification algorithm (Logistic Regression, Gradient Boosting Trees, Random Forest, K-Nearest Neighbors, Support Vector Machines, etc.) that would attach a probability score to each lead.

That probability score could be leveraged to infer the most worthwhile leads to target. It also allows for cross-analysis of the most valuable areas for the business to target, such as geographic, demographic, specific campaigns, experiential scoring cohorts, etc.
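
As a rough illustration of how a classification algorithm attaches a probability score to each lead, here is a minimal logistic regression written in plain Python. In practice you would reach for a library such as scikit-learn. The feature names, the tiny training set and the learning-rate settings below are all assumptions made up for this sketch.

```python
import math

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of log loss with respect to the logit
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def score_lead(x, weights, bias):
    """Probability that a lead converts, given its features."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

# Hypothetical features per historical lead:
# [site visits scaled to 0-1, opened a campaign email (0 or 1)]
history = [[0.1, 0], [0.2, 0], [0.3, 1], [0.4, 0],
           [0.6, 1], [0.7, 1], [0.8, 0], [0.9, 1]]
converted = [0, 0, 0, 0, 1, 1, 1, 1]  # the labeled outcome (y)

weights, bias = train_logistic(history, converted)

new_leads = {"lead_a": [0.15, 0], "lead_b": [0.85, 1]}
scores = {name: score_lead(x, weights, bias) for name, x in new_leads.items()}
for name, probability in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(name, round(probability, 3))
```

Sorting leads by score gives the targeting order, and the same scores can be averaged by geography, campaign or cohort for the cross-analysis described above.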

Unsupervised

Unsupervised learning involves input data (x) only. The goal of unsupervised learning isn’t to get an answer from the dataset but rather to learn more about the data you already have. Two types of algorithms are used to analyze and group data — clustering and association. Here’s a quick description of each:

- Clustering algorithms identify clusters, or groups, of cohorts with similar purchase behaviors.

- Association algorithms identify common attributes among the data, helping to predict future behavior patterns through past indicators. For example, people who buy X are more likely to buy Y.

Neither of these cases relies on a specific output to come up with a yes or no answer. Instead, algorithms in an unsupervised learning environment look for patterns within the data to draw conclusions.
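
To ground the "people who buy X are more likely to buy Y" idea, here is a small sketch of the three measures commonly used to evaluate an association rule: support, confidence and lift. The baskets and product names are invented for illustration, and a real project would typically run a dedicated algorithm over far more transactions.

```python
def association_metrics(baskets, x, y):
    """Evaluate the rule 'buyers of x also buy y' over a list of baskets."""
    n = len(baskets)
    has_x = sum(1 for b in baskets if x in b)
    has_y = sum(1 for b in baskets if y in b)
    has_both = sum(1 for b in baskets if x in b and y in b)

    support = has_both / n                           # how common the pair is
    confidence = has_both / has_x if has_x else 0.0  # P(y | x)
    # Lift above 1 means x buyers pick y more often than shoppers overall.
    lift = confidence / (has_y / n) if has_y else 0.0
    return {"support": support, "confidence": confidence, "lift": lift}

# Hypothetical purchase history, one set of products per customer.
baskets = [
    {"tent", "sleeping_bag"},
    {"tent", "sleeping_bag", "stove"},
    {"tent", "stove"},
    {"sleeping_bag"},
    {"stove"},
    {"tent", "sleeping_bag"},
]

rule = association_metrics(baskets, "tent", "sleeping_bag")
print(rule)
```

In this toy data, three of the four tent buyers also bought a sleeping bag, so the rule's confidence is 0.75 against a baseline purchase rate of about 0.67.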

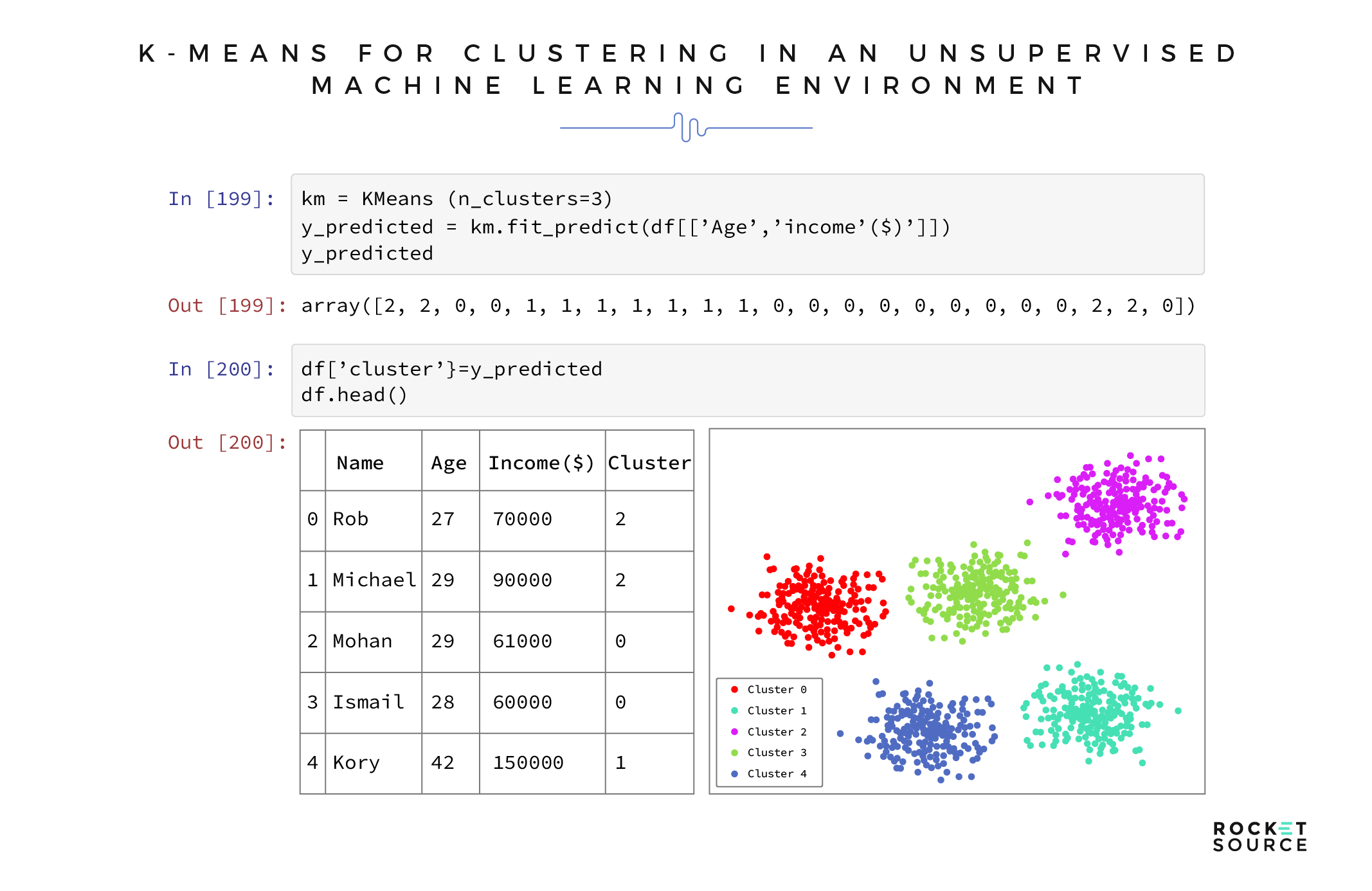

To illustrate this point, let’s continue with the lead targeting example. In an unsupervised learning scenario, we could apply a clustering algorithm to a list of leads with appropriate attributes or features to cluster each lead into N classes. The result would be that each record (lead) in the dataset would have an appended class number. It looks something like this:

Once we clustered out our various cohorts, we could work toward inferring the characteristics of each cluster using the association algorithm. This algorithm applies business value in the form of action. For example, we’d be able to identify purchase patterns from each of our various cohorts and drive initiatives based on that predicted behavior.
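
Here is a minimal sketch of the clustering step described above, using a plain-Python k-means that appends a cluster number to every lead record. The two features, the lead records and the choice of two clusters are assumptions for the demo, and production work would normally use a library implementation.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: returns one cluster index per point, plus the centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k of the records
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(d) / len(members) for d in zip(*members))
    return labels, centroids

# Hypothetical lead records with two already-scaled features.
leads = [
    {"id": "L1", "monthly_visits": 1.0, "avg_order": 1.0},
    {"id": "L2", "monthly_visits": 1.5, "avg_order": 2.0},
    {"id": "L3", "monthly_visits": 2.0, "avg_order": 1.0},
    {"id": "L4", "monthly_visits": 1.0, "avg_order": 2.0},
    {"id": "L5", "monthly_visits": 8.0, "avg_order": 9.0},
    {"id": "L6", "monthly_visits": 9.0, "avg_order": 8.0},
    {"id": "L7", "monthly_visits": 8.5, "avg_order": 9.5},
    {"id": "L8", "monthly_visits": 9.0, "avg_order": 9.0},
]

points = [(lead["monthly_visits"], lead["avg_order"]) for lead in leads]
labels, _ = kmeans(points, k=2)

# Append the cluster number to each record, as described in the text.
clustered = [dict(lead, cluster=lab) for lead, lab in zip(leads, labels)]
for record in clustered:
    print(record["id"], record["cluster"])
```

The cluster numbers themselves are arbitrary. What matters is which leads end up sharing a number, which is why the follow-up step of characterizing each cluster is where the business value appears.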

Semi-Supervised

Semi-supervised learning is a combination of unsupervised and supervised learning. As in a supervised learning environment, some of the data is labeled. Other data remain unlabeled and rely on the algorithm to draw conclusions from patterns within the data.

A semi-supervised learning environment can reduce the cost of labeling data while still offering algorithmic insight to formulate conclusions that answer business questions.

An example of what you can find in a semi-supervised environment is sentiment. By taking a labeled dataset around the general sentiment of a customer and aligning it with a larger unlabeled dataset of social verbiage, the algorithm can continuously predict whether a customer is happy or upset.
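
One common way to combine a small labeled set with a larger unlabeled one is self-training: fit on the labeled data, pseudo-label only the unlabeled records the model is confident about, then refit. The sketch below uses a simple nearest-centroid classifier to show the loop. The sentiment features, the records and the confidence margin are all assumptions for illustration, and this is only one of several semi-supervised techniques.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit_centroids(labeled):
    """Average the feature vectors of each class."""
    by_class = {}
    for features, label in labeled:
        by_class.setdefault(label, []).append(features)
    return {
        label: tuple(sum(d) / len(rows) for d in zip(*rows))
        for label, rows in by_class.items()
    }

def predict(features, centroids):
    """Assign the class whose centroid is nearest."""
    return min(centroids, key=lambda label: sq_dist(features, centroids[label]))

def self_train(labeled, unlabeled, rounds=5, margin=2.0):
    """Grow the labeled set with confident pseudo-labels (needs 2+ classes)."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(labeled)
        confident, remaining = [], []
        for features in pool:
            dists = sorted(sq_dist(features, c) for c in centroids.values())
            # Accept only when the runner-up class is clearly farther away.
            if dists[1] >= margin * dists[0]:
                confident.append((features, predict(features, centroids)))
            else:
                remaining.append(features)
        if not confident:
            break  # nothing new learned this round
        labeled += confident
        pool = remaining
    return fit_centroids(labeled), labeled, pool

# Hypothetical features: (share of positive words, share of negative words).
labeled = [((0.9, 0.1), "happy"), ((0.8, 0.2), "happy"),
           ((0.1, 0.9), "upset"), ((0.2, 0.8), "upset")]
unlabeled = [(0.85, 0.1), (0.7, 0.3), (0.15, 0.9), (0.3, 0.75), (0.5, 0.5)]

centroids, final_labeled, pool_left = self_train(labeled, unlabeled)
print(len(final_labeled), "labeled records;", pool_left, "left unlabeled")
print(predict((0.75, 0.2), centroids))
```

The ambiguous record stays unlabeled instead of being forced into a class, which is the safeguard that keeps pseudo-labeling from reinforcing its own mistakes.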

Once you know what you’re trying to solve for and the type of model that makes the most sense to build, you can start outlining your approach.

The Importance of Framing, Scope Development and Problem Definition in Machine Learning Models

Phew! We’ve made it through the technical side of things and are now onto one of our favorite aspects of machine learning and predictive modeling — framing the problem you’re aiming to solve.

Organizations aspire to build and deploy a multitude of machine learning models. Because there are so many, it can be difficult to know where to start. Some people argue that starting with top initiatives of the business or the most critical metrics is best. While it’s valuable to have identified a model, we argue that you must dig deeper by asking more questions up front, such as:

- How do you plan to identify the target metric you want to predict?

- How do you define the limitations of the target metric?

- What level of context and data breadth are you able to include?

- Is there any third party data we can leverage to add incremental value to the model?

- How can we assimilate insights (from both the development and deployment) of our model into the appropriate communication lanes, processes and organizational materials/literature to allow for digital economies of scale?

It’s imperative that you frame a question or objective so that it’s conducive to predictive modeling. To illustrate this point, let’s use the example of customer churn. After you determine that you have a problem with high customer turnover, what are your next steps? Yes, you want to build a model to help you understand which customers have left and predict which customers are most likely to churn, but before you can do that you must define churn.

As with so many elements of the modern marketing funnel, churn will vary depending on the business model. By definition, churn can happen across a range of transactions, such as regular product purchases, visits to a hotel, maintenance on a vehicle or renewing a subscription. No matter the case, the definitions and parameters specific to that given model are imperative. With the proper curation and configuration of the data, the ability to build and deploy accurate, relevant models increases. Further, ensuring that the appropriate stakeholders across the business are involved in this part of the modeling process will make the outcomes more relevant and increase the chances of adoption as the process matures.

Framing and adding context to your data will give you more accurate results from predictive machine learning models.

This is an important point, so we’re going to consider a few different business models to specify what it means to frame a given question appropriately and define parameters accurately.

First up, let’s look at the definition of churn. Considering a subscription-based Software as a Service (SaaS) model, how would you define a churned customer? The easy answer is one who simply does not renew after expiration. But let’s deepen that definition. Is it considered churn when a customer doesn’t renew initially because their credit card had expired but then returns after three months with a new card? Probably not. On the flipside, consider a vehicle repair business model. How often would you expect to see customers and how do you define “regularity” in terms of non-canceled customers? If a customer doesn’t come in for their oil change after three months, do you consider them a churned customer? How about six months?

Another element you must define is customer tenure. What are the minimum and maximum customer lifespans to be included in or excluded from the historic data we’re training our model on? For example, an annual subscription-based SaaS business probably shouldn’t use a non-churned customer who has only been with the company for six months, because they haven’t reached the full lifecycle of an annual subscription yet, whereas a vehicle repair shop could.
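
The churn definition and the tenure rule can be written down as explicit, tunable parameters before any modeling starts. The sketch below is one hedged way to do that. The field names (`start`, `paid_through`), the 90-day grace period and the 365-day minimum tenure are assumptions for an annual SaaS example, not recommended values.

```python
from datetime import date

def label_churn(customer, as_of, grace_days=90, min_tenure_days=365):
    """Return 'churned', 'active', or None when the record should be excluded.

    grace_days: how long a lapse is tolerated before it counts as churn
    min_tenure_days: minimum lifespan for a non-churned customer to be
    usable as a training example
    """
    days_lapsed = (as_of - customer["paid_through"]).days
    if days_lapsed > grace_days:
        return "churned"
    tenure = (as_of - customer["start"]).days
    if tenure < min_tenure_days:
        return None  # has not yet completed a full lifecycle
    return "active"

as_of = date(2024, 6, 30)

# Hypothetical customers keyed by id.
customers = {
    "a": {"start": date(2022, 1, 1), "paid_through": date(2024, 12, 31)},
    "b": {"start": date(2022, 1, 1), "paid_through": date(2024, 5, 15)},
    "c": {"start": date(2021, 3, 1), "paid_through": date(2024, 1, 31)},
    "d": {"start": date(2024, 1, 15), "paid_through": date(2025, 1, 14)},
}

labels = {cid: label_churn(c, as_of) for cid, c in customers.items()}
print(labels)
```

Customer "b" lapsed 46 days ago and stays active under the grace period, which mirrors the expired credit card case. A vehicle repair shop could call the same function with something like `grace_days=180` and `min_tenure_days=0` to reflect its own rhythm.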

Finally, you’ll need to look at the path-to-purchase each customer took before they churned. For example, if two customers went through different onboarding cycles when starting their subscription, their path-to-purchases would not be the same experience. You’d be comparing apples to oranges, which would skew the output of the model and the accuracy of the predictions. However, comparing similar paths-to-purchase would let you identify opportunities to reduce customer turnover.

Analytical Maturity and the Importance of Self-Awareness

Some things in life get better as they mature. Fine wines, people and analytics are a few of those things. As organizations deepen their data sets and analytics, they’re able to get more value from the information they have at their disposal, thereby becoming more mature and self-aware.

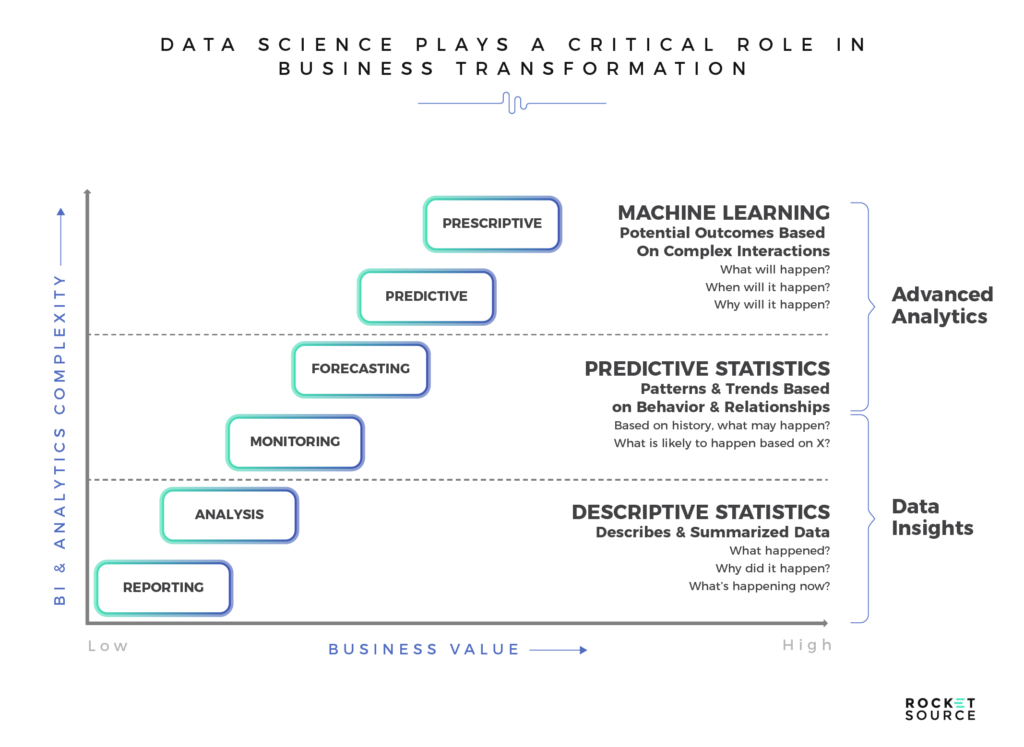

Every organization has a level of analytical maturity. Knowing where your company falls on the analytical maturity scale can help you understand what data is available and identify any areas you might need to improve to drive more business value from that data.

The incremental climb in analytical maturity depends on a multitude of factors spanning the cohesiveness of a business’s informational infrastructure and its set of combinatory systems, which we talked about in depth in our post about organizational growth via digital transformation. For the sake of this post, the big takeaway is this: having a fundamental understanding of current state versus future state is vital if you want to lay out short- and long-term strategies that drive incremental, digital improvement.

Driving that maturity requires a deep enough data set, one that shows the expansion of given touchpoints. It’s these touchpoints and the additional context that allow you to gain the type of insights needed to move away from basic reporting and analysis into an area where you can leverage predictive machine learning models.

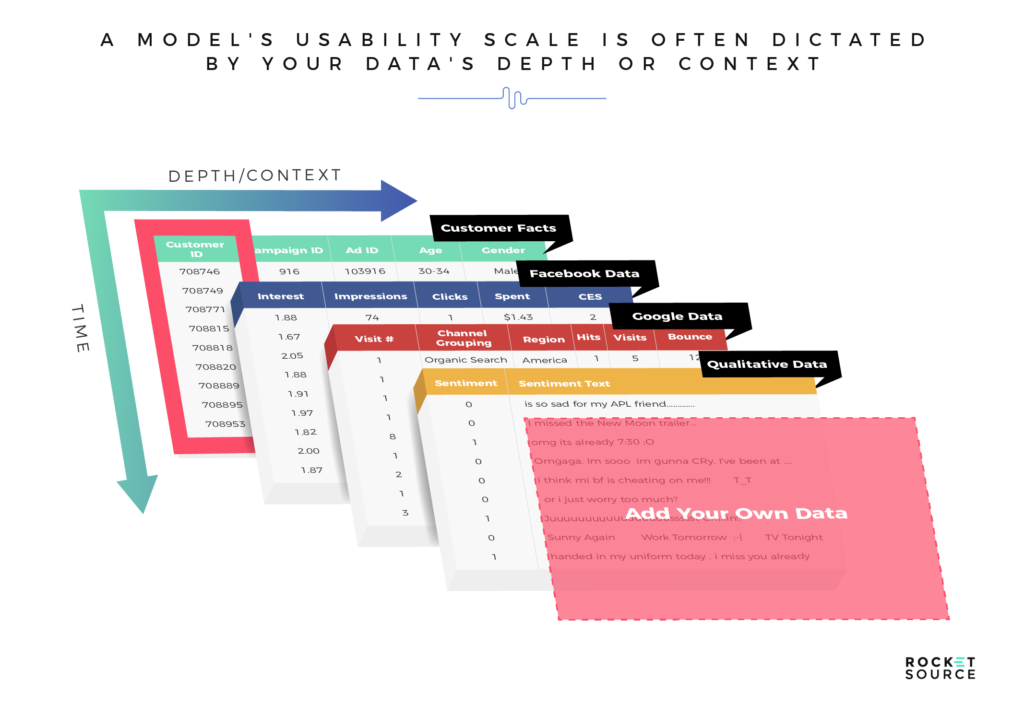

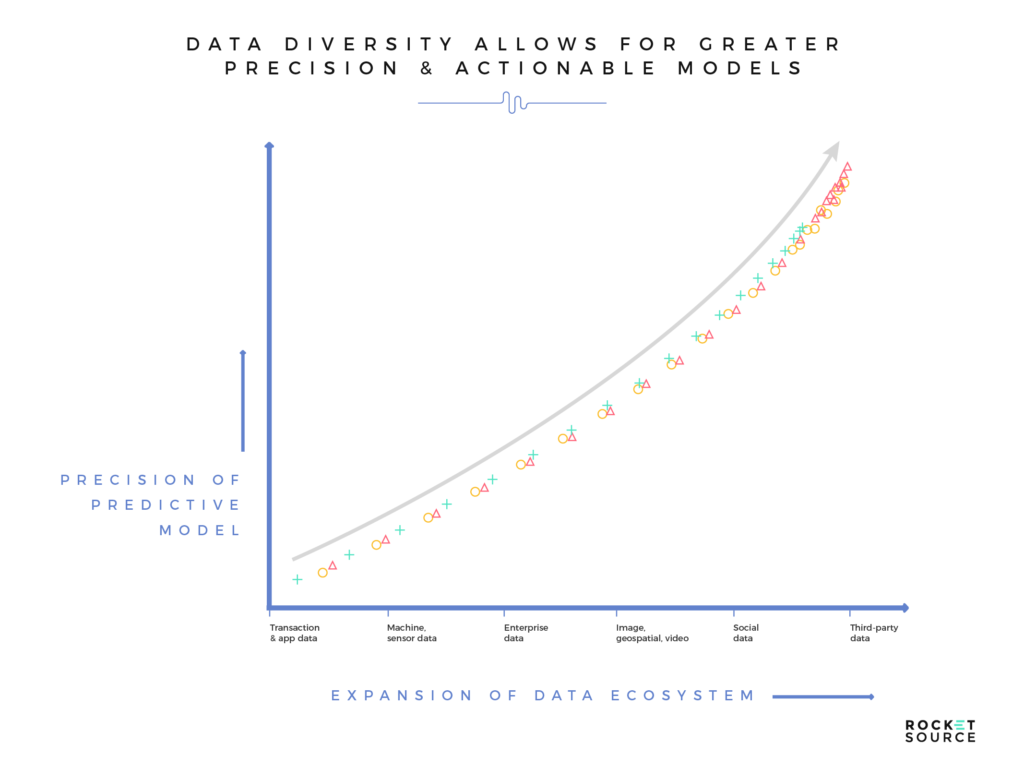

The more layers or dimensions (also known as features) you’re able to add to your model’s data, the deeper you can go with your analysis. As a result, you’ll see better predictability, which in turn will bolster the accuracy and performance of a machine learning model’s output. This doesn’t happen without a noteworthy level of data diversity in the model. The more expansive or diverse the model is, the more robust the performance will be, from both an accuracy and an actionability standpoint.

Any business wanting to increase their analytical maturity must develop strategies and frameworks that allow for incremental improvements by breaking down their existing organization into four key areas:

- Maintaining a consistent vision and correlating your informational infrastructure accordingly

- Championing the forward thinkers and digital rockstars

- Governing your informational infrastructure with intentional, relevance-based protocols

- Possessing incremental levels of depth and digital cohesiveness to catalyze innovation

Let’s look at each area individually.

Maintaining a Consistent Vision and Correlating Your Informational Infrastructure Accordingly

The goals of any organization can often be accomplished with real-time insights and a clear understanding of business performance at micro and macro levels. This understanding tends to come in the form of analytics and reporting. Time and again, though, we witness the ability of organizations to provide meaningful analytics being stifled by silos and misinformed data infrastructure and pipelines.

If your company’s informational infrastructure does not align directly to your short- and long-term goals or across the 3 Ps in your organization, your ability to achieve a meaningful level of analytical or digital maturity is limited. This limitation makes it difficult to maintain a consistent vision for your entire organization, which can create confusion and cause teams to lose focus. By correlating your informational infrastructure with your vision, you’re able to keep your company on track and drive more accurate predictions from your models.

Championing the Forward Thinkers and Digital Rockstars

Technological innovations are moving at lightning speed. This statement is true across your organization, but data scientists tend to feel it the most. New technology hits the scene daily, forcing your data scientists to drink from a firehose to keep up. Helping them, and your organization, stay ahead of the competition requires that you prioritize holistic modernization and training.

Companies that champion their forward thinkers and digital rockstars are able to advance their businesses by way of analytics. Empowering everyone on your team to have a solid grip on what’s being done and why will keep the bow of your organization’s ship pointed forward.

Governing Your Informational Infrastructure With Intentional, Relevance-Based Protocols

The most intelligent businesses have long-term goals. Instead of going through one drastic change after another, they leverage analytics to make small incremental improvements over time. When taking this small-step approach, it’s imperative that a level of cohesive governance exists around the data and its corresponding processes and protocols. In other words, there should be systems in place to ensure that data is clean and relevant, while also assuring that there are change management and solicitation protocols.

One particular area for which data governance is critical is feedback loops. If you’re not using feedback loops yet, please, please, please start now. If it feels like we’re begging with that statement, we are. Here at RocketSource, we are huge proponents of feedback loops. We have systems in place to allow data to be collected from internal and external users, and have designed informational infrastructure to use this data to maintain a level of relevancy and value to help propel the business in the right direction. This approach cannot happen without a properly developed governance strategy and framework.

Possessing Incremental Levels of Depth and Digital Cohesiveness

Likely the most important aspect of the last mile of any organization’s journey toward noteworthy digital maturity is the information (data) they have at their fingertips. We talk often about the context in data. What we mean is that the more information you can give to the data set (that is, the wider it is), the more insightful that data becomes. Wider data sets let you zoom out to see the forest, not just the trees.

The insights afforded by wider datasets tend to result in more action-based strategies. That’s because decision makers can get an accurate, precise, clear view of what’s happening in and around all that big data. But getting context and accuracy around your data requires a cohesive digital infrastructure of your data repositories. As we dive further and further into a platform-rich world, data is being accessed from multiple places and instruments. The higher the disparity of systems, technology and data across an organization, the less context and depth in its data. Combining repositories and leveraging a solid framework to deploy your models ultimately dictates just how insightful you can get.

A Framework for Deploying Machine Learning Predictive Models

When pulling together the framework to deploy a predictive model, it’s imperative you have your 3 Ps in place. Remember those from earlier in this post? They’re your people, processes and platforms. In addition to the 3 Ps, your data pipelines, data governance and digital infrastructure must be properly built out. This proper build out can’t happen without using the appropriate operationalization precepts.

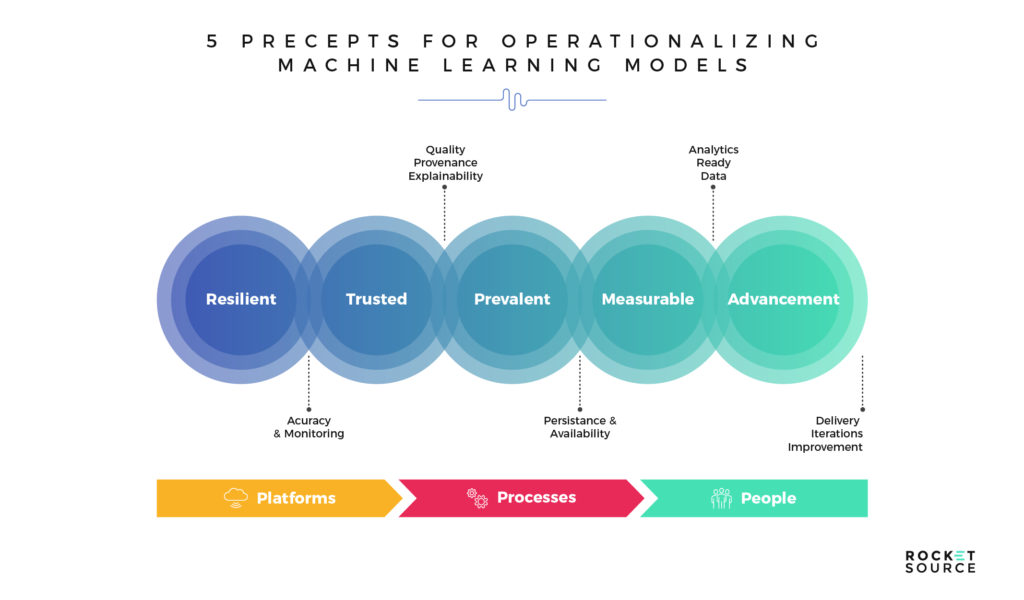

We try very hard to avoid excessive jargon around here. When we say ‘operationalizing’ your machine learning models, we simply mean setting concrete parameters around how you put your data in motion and then leveraging and disseminating those parameters across team structures. These parameters turn fuzzy concepts into something more concrete. As you start to wrap your head around how you’ll use your machine learning models to drive action in your organization, these are the general parameters you’ll want to stick to throughout the process — resiliency, trust, prevalence, measurability and advancement.

Resiliency

First and foremost, the informational infrastructure you have in place must be resilient against any disruptions, such as accidental tweaking of data sets due to a lack of data governance or delayed and disjointed third-party collection methodologies.

As your data arrives from outside sources, platforms or methodologies, it must remain clean as it gets pushed through the data pipeline. By ensuring resilience up front, you’re in a better position to recover from any possible disruptive events in a timely fashion.

Before deploying any machine learning model, you must ensure resiliency in your informational infrastructure.

Trustworthiness

In addition to having a resilient pipeline, you must ensure the data arriving on the other side is trustworthy. As the saying goes, dirty data in means dirty data out. It’s critical that you have parameters in place to ensure you’re leveraging accurate data sets to drive accuracy in your modeling. Only with trustworthy data can a business user reliably interpret the results of a given model.
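
As one hedged example of what "parameters in place" might look like, the sketch below runs a few basic checks (required fields, plausible ranges, duplicate ids) before records reach a model. The field names, the age range and the sample records are assumptions for illustration, and real pipelines usually layer many more rules on top.

```python
def validate_records(records, required, ranges):
    """Split records into clean rows and a list of (index, problems)."""
    clean, issues = [], []
    seen_ids = set()
    for i, rec in enumerate(records):
        problems = []
        # Required fields must be present and non-empty.
        for field in required:
            if rec.get(field) in (None, ""):
                problems.append(f"missing {field}")
        # Numeric fields must fall inside a plausible range.
        for field, (low, high) in ranges.items():
            value = rec.get(field)
            if value is not None and not (low <= value <= high):
                problems.append(f"{field} out of range")
        # The same id should not arrive twice.
        if rec.get("id") in seen_ids:
            problems.append("duplicate id")
        seen_ids.add(rec.get("id"))

        if problems:
            issues.append((i, problems))
        else:
            clean.append(rec)
    return clean, issues

# Hypothetical incoming records.
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 41},
    {"id": 3, "email": "c@example.com", "age": 212},
    {"id": 1, "email": "d@example.com", "age": 29},
    {"id": 4, "email": "e@example.com", "age": 52},
]

clean, issues = validate_records(
    records, required=["id", "email"], ranges={"age": (18, 120)}
)
print(len(clean), "clean records")
for index, problems in issues:
    print("record", index, "->", ", ".join(problems))
```

Returning the rejected rows along with their reasons, instead of silently dropping them, gives the governance team something concrete to trace back to the source system.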

Quality is key when it comes to achieving a meaningful level of trustworthiness in the data. By quality, we don’t just mean accurate data. We mean relevant data too. This relevancy comes as a result of having an expansive context around your data sets, as we discussed above. Knowing where your data originated is critical, even if it’s immature when it first arrives in your pipeline. The goal is to ensure that the features and outputs of a given model are valuable and relevant in a given business context. This context allows data scientists to make the data and the model’s findings explainable to a business layman, dramatically increasing the likelihood that the model will add value in a business setting.

The ability to explain a given prediction, engineered feature or a fit statistic in a machine learning model is critical.

Prevalence

Think of your organization as a beautiful tapestry. In order for the picture on the tapestry to have meaning and clarity, data must be leveraged consistently and cultivated regularly for newfound insights that drive improvement and innovation. It’s here that the 3 Ps really come to light.

The people of an organization must understand how specific data can facilitate informed decisions and a more optimal day-to-day work environment, such as given OKR achievements or marketing milestones. The processes of an organization can continually be made more efficient, reducing wasteful steps while still enhancing profits. In order for this to happen, the most critical and insightful data points must be assimilated into the key processes of a business. This assimilation must happen in a way that feels natural and non-disruptive to the end-user. In other words, you can’t ask your people to change their normal operational behavior to consume new reporting and expect stellar results. Instead, having a well-documented set of processes and inter/intra relationships across the organization will help properly articulate the who, what, where, when and why of marrying an organization’s operations with its informational infrastructure.

If you skip this step, you’ll miss a giant opportunity. Infusing a level of familiarity and proficiency in using data to make decisions and take action in a regular business setting is a foundation that every organization can lean on when adding advanced analytics and predictive modeling to daily operations.

If data are not prevalent across the inner workings of an organization, there’s a limited ability to mature to a point at which machine learning models are being leveraged.

Measurability

Ultimately, there comes a point at which the business has to define measurable outcomes at both macro and micro levels. It’s this measurability that helps demonstrate the ROI of a machine learning model and gain buy-in across the organization. Without the ability to leverage data to measure performance against outcomes, it’ll be harder to get your team on board with the cultivated, direct and tangential insights that are byproducts of machine learning models.

It’s important to note here that data as an informational asset is not what propels an organization into analytical maturity. But when specific, measurable definitions are created such that the data can be leveraged to drive transparency and context around critical metrics, the ability to move faster and in a more informed manner becomes reality.

Performance measurement improves the overall effectiveness of an organization’s core operations and gives way to tangible results.

Advancement

The advancement of your organization’s informational infrastructure only happens with the proper development and configuration of a few critical areas — your data, rules, platforms and architecture.

First and foremost, you need to have proper data collection methodologies in place. Remember that we said feedback loops were so critical? Yeah, we meant it. It’s crucial to have feedback loops in place to validate the existing data and provide the information necessary to optimize and improve the most pertinent areas of the business.

Once you have your data, defining semantic rules for your data sets ensures consistency and appropriate usage. These rules drive data governance, keeping your teams aligned so data remains clean and trustworthy. The platforms and technologies being leveraged must also be properly and consistently cited and managed. If platforms and technologies are not used and their capabilities not maximized, it’s very difficult to realize the value of an appropriately configured informational ecosystem and infrastructure. To this end, the architecture of the overall set of technologies, infrastructure and operations must be continually iterated upon to let growth happen in a natural way.

Advancement only happens after your data, rules, platforms and architecture are properly developed and configured.

The way you engineer your data matters. If your data sets are not prevalent and pervasive, it will be nearly impossible to continuously make the most relevant data available to the model, which can skew your team’s ability to measure outcomes and advance the organization. Bringing it all together with these five precepts (resiliency, trustworthiness, prevalence, measurability and advancement) in place ensures forward momentum for your organization — but it takes the right people in the right seats on the bus to push down on the gas pedal.

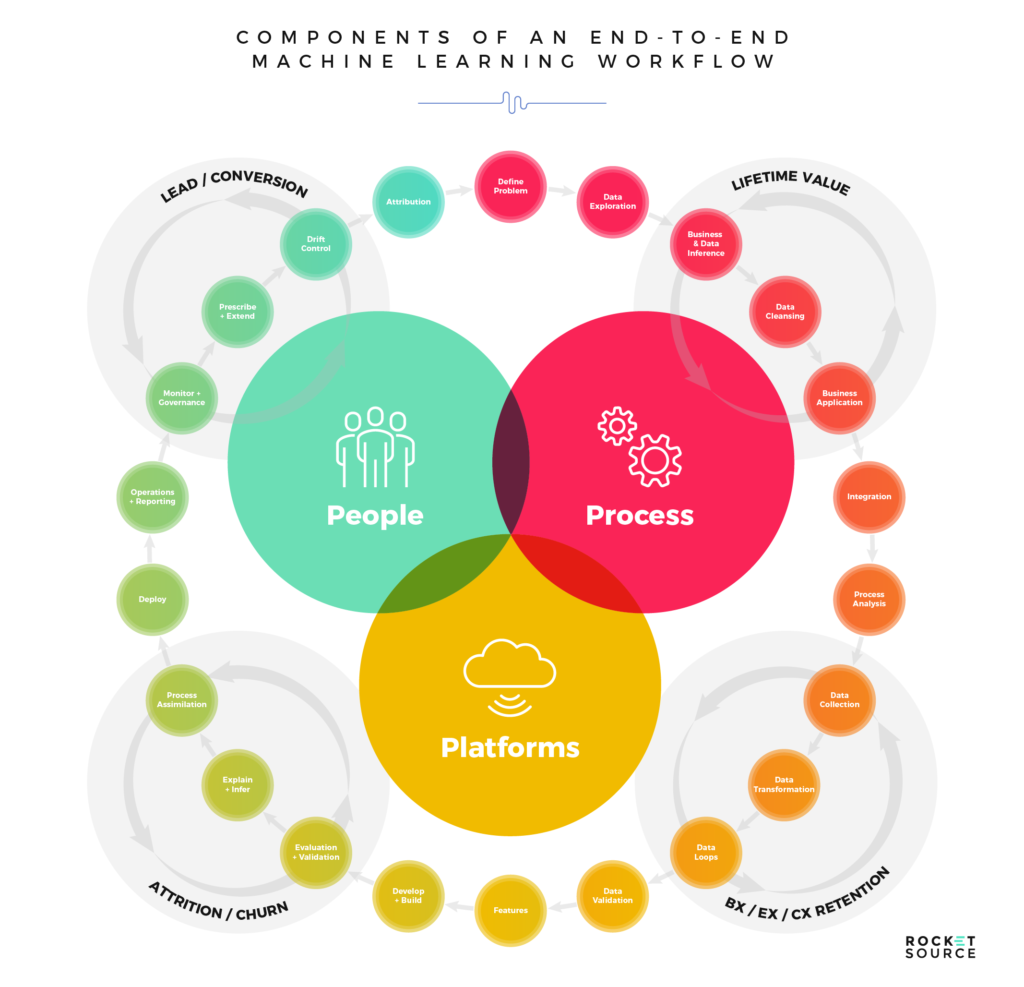

Successfully Deploying Machine Learning Models

There are various opinions and assertions out there regarding the end-to-end process of building and deploying predictive models. We strongly assert that the deployment process is not a process at all — it’s a lifecycle. Why? It’s an infinite process of iterations and improvements. Model deployment is in no way synonymous with model completion. We will go deeper into the reasons for this in the section below as we address the requisite steps for operationalizing a model, but the high-level post-deployment steps are called out in the following diagram. Here’s what that deployment looks like in action:

Validating Your Machine Learning Models With Fit Statistics

Once a model is built, inferring the results is imperative. When we refer to model inference, we’re actually addressing the importance of understanding why a model is performing in a specific way. This drills down into the takeaways of critical fit statistics that lead to proper business translation and, ultimately, to a fundamental, mathematical, technical and data-level understanding of the model itself. Knowing this will serve you well when maintaining a model in the wild. To this end, it’s imperative you’re able to analyze the model’s fit statistics and metrics in an educated and informed manner. These metrics will tell you how effective your model is at making predictions around your specific business outcomes.

An important note before we dive in: in this section, we’re addressing the more common fit statistics specific to the models at the end of the post, which are all primarily supervised learning on structured data, and which range from classification to continuous numerical (regression) problems. There are specific commonly calculated fit statistics for each model type.

Fit Statistics for Classification Models

A classification model predicts a class, such as a label or category, by learning a function (f) that maps input variables (x) to output variables (y). For example, you can classify a woman as either being pregnant or not being pregnant. There is no in-between.

To know if the model is giving you the most accurate results (i.e., not spitting out false negatives or false positives), you must analyze your predictions using one or more of the following metrics:

- Confusion or Error Matrix

- Accuracy

- Recall, Sensitivity or TPR (True Positive Rate)

- Precision

- Specificity or TNR (True Negative Rate)

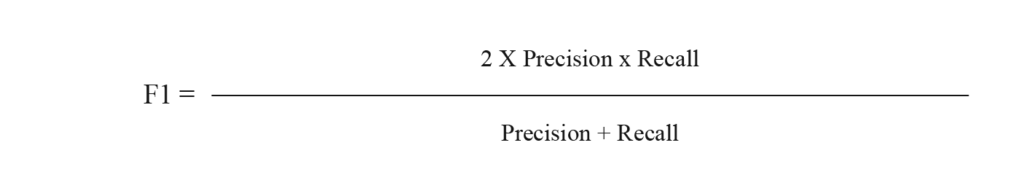

- F1 Score

For the sake of this post, we won’t go into each of these in-depth. However, if you’re running a classification model, it’s critical that you analyze a variety of these metrics to determine whether your model is producing accurate, precise results you can confidently rely on to predict the direction for your business’s success.

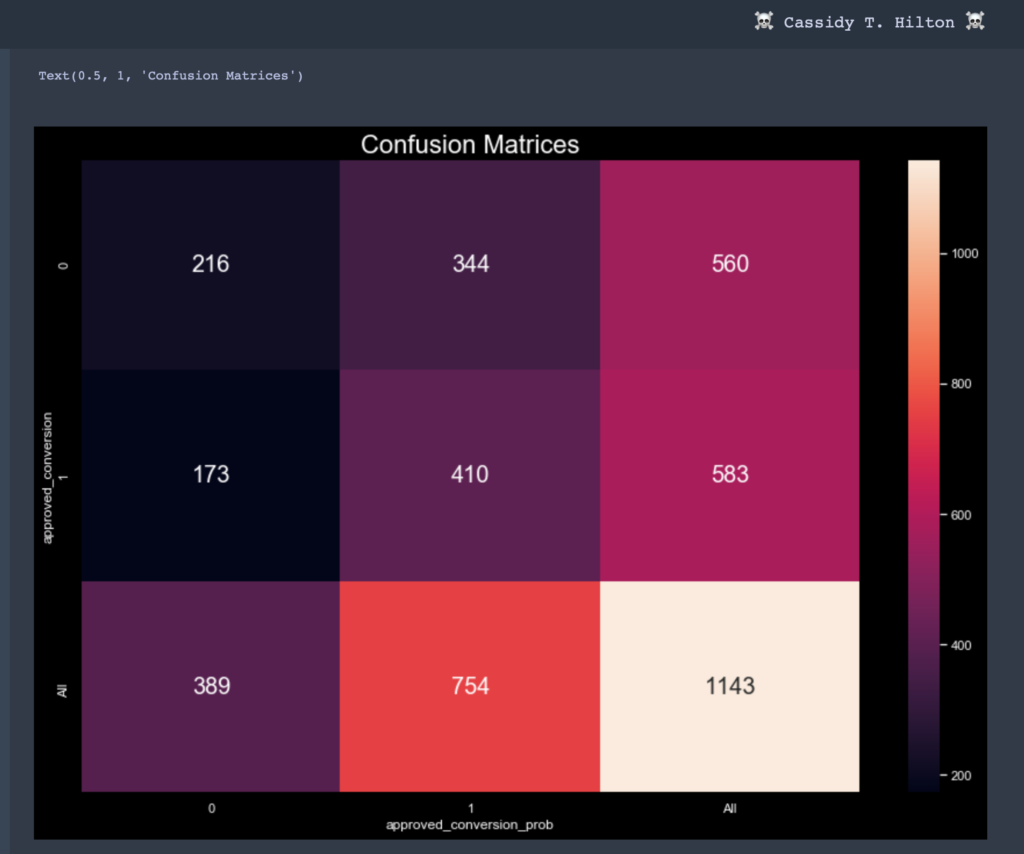

Confusion Matrix

A confusion matrix is a table that articulates the performance of a classification model. The graphic is called a “confusion matrix” because it quickly makes clear where the algorithm is confusing each class.

If you’re not used to reading a confusion matrix, here’s a quick rundown of what it measures:

- True Positives (TP): the actual class of the data point was True and the prediction is also True

- True Negatives (TN): the actual class of the data point was False and the prediction is also False

- False Positives (FP): the actual class of the data point was False and the prediction is True

- False Negatives (FN): the actual class of the data point was True and the prediction is False
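The four cells above can be counted directly from a list of actual outcomes and a list of predictions. Here is a minimal sketch in plain Python; the function name `confusion_counts` and the sample labels are our own illustrative assumptions, not part of any particular library.

```python
def confusion_counts(actual, predicted):
    """Count TP, TN, FP and FN for two equal-length lists of booleans."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a and p:
            tp += 1          # actual True, predicted True
        elif not a and not p:
            tn += 1          # actual False, predicted False
        elif not a and p:
            fp += 1          # actual False, predicted True
        else:
            fn += 1          # actual True, predicted False
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Hypothetical data: did each lead convert, and what did the model predict?
actual    = [True, True, True, False, False, False, False, True]
predicted = [True, False, True, False, True, False, False, True]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 3, 'TN': 3, 'FP': 1, 'FN': 1}
```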

Depending on the model, business goal and overall scenario, you will likely need to adjust certain areas of the model to minimize false negatives or false positives. There are several instances when it’d make sense to take this route. Let’s look at two.

First, consider that you’ve just set up a lead qualification model. With this type of machine learning model, you’ll probably want to minimize false negatives because the goal of the model is to bring you qualified leads. Your sales team would rather have more leads to work with than fewer. If your model is filtering out leads as “not likely to convert” when there may indeed be a chance that a lead would convert, you’ll miss out on potential sales.

On the flip side, you’ll likely need to minimize false positives when predicting the likelihood that an individual will default on a loan. If you’re a lending institution, you don’t want your machine learning models to tell you someone is likely to default when in fact they’re not. That’s known as a false positive and could be a colossal miss if either the loan amount or the benefit to the lender is sizable.
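One common way to shift the balance between the two error types, assuming your model outputs a score or probability (the post doesn’t prescribe a specific technique), is to move the decision threshold. The sketch below uses made-up scores and hypothetical helper names to show the trade-off.

```python
def classify(scores, threshold):
    """Label a record positive when its score meets the threshold."""
    return [score >= threshold for score in scores]


def count_errors(actual, predicted):
    """Return (false_positives, false_negatives)."""
    fp = sum(1 for a, p in zip(actual, predicted) if not a and p)
    fn = sum(1 for a, p in zip(actual, predicted) if a and not p)
    return fp, fn


# Hypothetical model scores and true outcomes for six records.
scores = [0.92, 0.75, 0.61, 0.48, 0.35, 0.20]
actual = [True, True, False, True, False, False]

low_threshold = count_errors(actual, classify(scores, 0.30))
high_threshold = count_errors(actual, classify(scores, 0.70))

print(low_threshold)   # (2, 0): no missed positives, two false alarms
print(high_threshold)  # (0, 1): no false alarms, one missed positive
```

A lower threshold suits the lead qualification case, where missing a positive is the costlier mistake. A higher threshold suits cases where false alarms are the costlier mistake.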

As you reduce false negatives and false positives, your models become far more valuable to your business goals. But the confusion matrix is just the beginning. There are other calculations and measures you can take to continue making your machine learning predictive models more spot on.

Accuracy

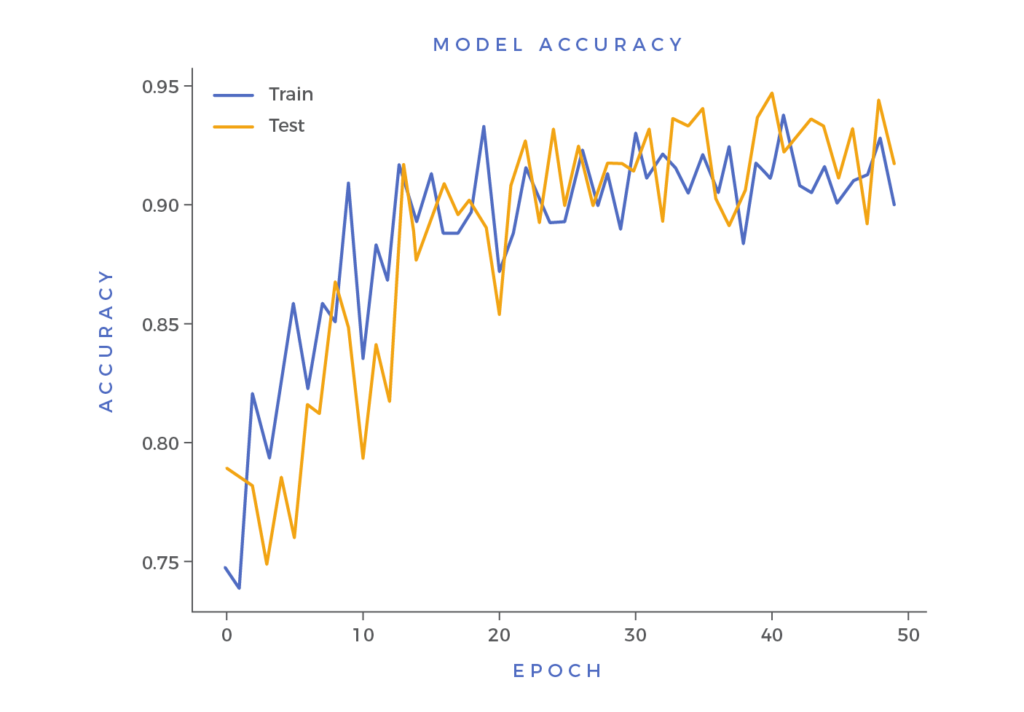

Accuracy is, in its simplest form, the percentage of total predictions that are correct. This fit statistic is a basic, but invaluable, measure of prediction quality. As you train and test the model, you’ll get values between 0 and 1, which will then be plotted on an accuracy chart that looks like this:

The higher the number or score, the more accurate the model. This metric gives us a fundamental idea of how our model would perform in the wild. Obviously it doesn’t make sense to lean on a model with limited accuracy, so it’s critical to test up front.
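In terms of the confusion matrix, accuracy is the correct predictions (true positives plus true negatives) divided by all predictions. A minimal sketch, with counts that are purely illustrative:

```python
def accuracy(tp, tn, fp, fn):
    """Share of all predictions that were correct, between 0 and 1."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Hypothetical counts: 6 of 8 predictions were correct.
acc = accuracy(tp=3, tn=3, fp=1, fn=1)
print(acc)  # 0.75
```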

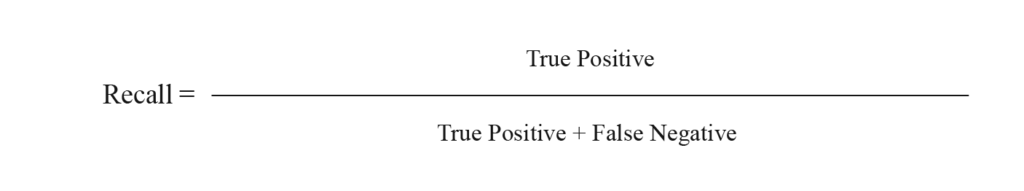

Recall (Sensitivity)

Recall is the share of actual positive records that the model correctly identifies, or predicts, as positive: true positives divided by the sum of true positives and false negatives. This metric provides us an understanding of a classifier’s performance regarding false negatives, or total missed records. Examining Recall is less about whether each positive prediction is correct and more about making sure little is missed, which gives companies more to work with. Taken to the extreme, though, it can mislead: if we simply said every lead is “likely to convert,” we would have 100% Recall but not a lot to work with, which is why Recall should be read alongside Precision.
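As a rough sketch, Recall can be computed from the true positive and false negative counts. The numbers below are hypothetical, and the second call mimics the “label everything positive” shortcut.

```python
def recall(tp, fn):
    """True positives divided by all actual positives (TP + FN)."""
    actual_positives = tp + fn
    return tp / actual_positives if actual_positives else 0.0


model_recall = recall(tp=3, fn=1)    # the model missed one of four converters
blanket_recall = recall(tp=4, fn=0)  # every lead labeled "likely to convert"

print(model_recall)    # 0.75
print(blanket_recall)  # 1.0
```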

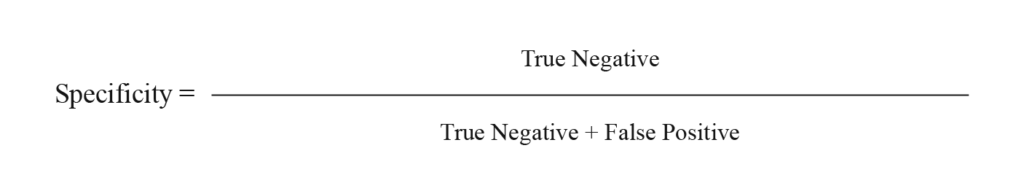

Specificity

Specificity is the counterpart to Recall for the negative class. This fit statistic looks at the model’s True Negative Rate to determine its ability to correctly classify negatives. The result tells us the total items correctly classified as negative out of the total number of actual negatives.
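Specificity follows the same pattern as Recall, applied to the negatives. A minimal sketch with illustrative counts:

```python
def specificity(tn, fp):
    """True negatives divided by all actual negatives (TN + FP)."""
    actual_negatives = tn + fp
    return tn / actual_negatives if actual_negatives else 0.0


# Hypothetical counts: 9 of 10 actual negatives were classified as negative.
spec = specificity(tn=9, fp=1)
print(spec)  # 0.9
```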

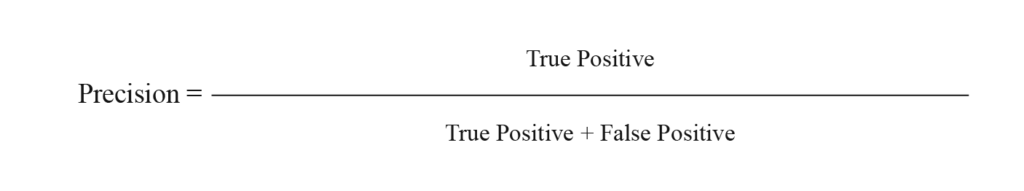

Precision

Precision indicates the model’s ability to be precise in its results. It works by calculating the number of records correctly predicted as positive over the total number of records predicted as positive (both true positives and false positives). This fit statistic provides us with an idea of our model’s performance by looking specifically at how many false positives it produced. We want precision in our models because they can help predict, and ultimately help prevent, scenarios with serious implications, such as a case of vandalism, terrorism, mass shooting or illness. The higher our Precision metric, the better our chances of stopping these and numerous other situations before they occur.
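Here is a small sketch of the Precision calculation. The counts are hypothetical, and the second call shows how a model that flags everything as positive pays for it in Precision.

```python
def precision(tp, fp):
    """True positives divided by everything predicted positive (TP + FP)."""
    predicted_positives = tp + fp
    return tp / predicted_positives if predicted_positives else 0.0


careful = precision(tp=3, fp=1)  # 3 of 4 positive predictions were right
blanket = precision(tp=4, fp=4)  # everything flagged, half of it wrongly

print(careful)  # 0.75
print(blanket)  # 0.5
```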

F1 Score

While all of the above fit statistics can be leveraged to make informed and nuanced business-level assertions about a given problem or model, it’s often nice for us, as machine learning engineers and business strategists, to aim and optimize our model around a single-number evaluation metric: the F1 score.

The F1 score (also known as the F score or F measure) is a single-number summary of a classification model’s Precision and Recall. It is the harmonic mean of the two, which pulls the result toward the lower value. There’s no golden rule about what counts as a good or bad F1 score. That’s because there are many factors that can influence the score, so it’s important to take context into consideration when using this metric. For example, classifiers with imbalanced precision and recall performance, like a naive classifier that has a tendency to simply predict the positive class, are at a disadvantage here. Likewise, models with a high precision score and a low recall score will achieve a low F1 score. Ultimately, the purpose of the F1 score is to reach a balance between Precision and Recall, especially for those cases with a less than ideal distribution of classes.
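To see how the harmonic mean punishes imbalance, here is a minimal sketch. The precision and recall values are made up for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


balanced = f1_score(0.5, 0.5)  # 0.5
lopsided = f1_score(0.9, 0.1)  # about 0.18

print(balanced, round(lopsided, 2))
```

Both pairs have the same simple average (0.5), but the lopsided pair scores far lower because the harmonic mean is dragged toward the weaker of the two values.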

Fit Statistics for Regression Models

A regression model predicts a continuous value by estimating a function (f) that maps input variables (x) to continuous output variables (y). For example, a machine learning predictive regression model would be able to estimate the dollar amount a house will sell for. Note that some algorithms include the word “regression” in their names, such as linear regression and logistic regression. It’s important to know that each of these algorithms is used for a very different purpose. Linear regression is a regression algorithm, while logistic regression is a classification algorithm.

When analyzing whether this type of machine learning prediction is accurate, we must look at the baseline output of the model. There are a variety of statistics we can use to determine how accurate, and therefore how reliable, the model is. Some of the most common ones include:

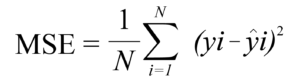

- Mean Squared Error (MSE)

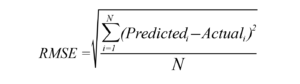

- Root Mean Squared Error (RMSE)

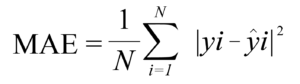

- Mean Absolute Error (MAE)

- R Squared (R²)

- Adjusted R Squared (R²)

Mean Squared Error

Mean Squared Error (MSE) is, for all intents and purposes, the average squared error of a model’s predictions. For each point, it calculates the squared difference between the prediction and the target and then averages those values. The higher this metric, the more poorly the model is performing. This metric will never be negative because the individual prediction errors are squared, and the metric for a perfect model would be zero.
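The calculation is short enough to sketch in a few lines. The house prices below are hypothetical values in thousands of dollars.

```python
def mean_squared_error(targets, predictions):
    """Average of the squared differences between targets and predictions."""
    squared_errors = [(t - p) ** 2 for t, p in zip(targets, predictions)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical sale prices (in $1000s) and the model's predictions.
targets     = [200, 250, 300, 400]
predictions = [210, 240, 330, 380]

mse = mean_squared_error(targets, predictions)
print(mse)  # 375.0, from squared errors of 100, 100, 900 and 400
```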

Root Mean Squared Error

Root Mean Squared Error (RMSE) is simply the square root of MSE. We turn to RMSE when large errors are especially undesirable and we want the result expressed in the same units as the target. RMSE and MSE are equivalent with regard to their minimizers: every minimizer of MSE is also a minimizer of RMSE, and vice versa, since the square root is an increasing function on non-negative values. To illustrate why this matters in a business setting, let’s consider two lists of predictions, X and Y. When the MSE of X is greater than the MSE of Y, the RMSE of X is also greater than the RMSE of Y. The same relationship is maintained in the opposite direction. Running RMSE doesn’t change which set of predictions ranks better; it only helps to home in on how large the typical error is in the target’s own units.
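The sketch below compares two hypothetical sets of predictions, X and Y, against the same made-up targets to show that MSE and RMSE rank them the same way.

```python
import math


def mse(targets, predictions):
    """Average squared error."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


def rmse(targets, predictions):
    """Square root of MSE, expressed in the same units as the target."""
    return math.sqrt(mse(targets, predictions))


targets = [200, 250, 300, 400]   # hypothetical prices in $1000s
model_x = [210, 240, 330, 380]   # MSE 375.0
model_y = [205, 245, 310, 395]   # MSE 43.75

# Whichever model is worse on MSE is also worse on RMSE.
print(mse(targets, model_x) > mse(targets, model_y))    # True
print(rmse(targets, model_x) > rmse(targets, model_y))  # True
print(round(rmse(targets, model_x), 2))                 # 19.36
```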

Mean Absolute Error

Mean Absolute Error (MAE) is calculated as the average of absolute differences between the target and the predicted values. MAE is a linear metric, which means that each of the individual differences is weighted equally in the average. For example, an error of 200 counts exactly twice as much as an error of 100. This is not the case for MSE and RMSE, where squaring gives large errors disproportionately more weight.

MAE helps uncover the costs in relation to large errors, though not to the degree of MSE, which is more sensitive to outliers. For this reason, we’ll often turn to MAE during strategic planning, industry and demand forecasting, and accounting/finance, when “errors” are taken at face value and the cost associated with them isn’t quite as high.
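To make the outlier point concrete, here is a small sketch with two invented sets of predictions. Both have the same MAE, but the one with a single large miss has four times the MSE.

```python
def mean_absolute_error(targets, predictions):
    """Average of the absolute differences."""
    return sum(abs(t - p) for t, p in zip(targets, predictions)) / len(targets)


def mean_squared_error(targets, predictions):
    """Average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)


targets = [100, 100, 100, 100]
steady  = [110, 90, 110, 90]     # four small misses of 10
outlier = [100, 100, 100, 140]   # three perfect predictions, one miss of 40

print(mean_absolute_error(targets, steady))   # 10.0
print(mean_absolute_error(targets, outlier))  # 10.0
print(mean_squared_error(targets, steady))    # 100.0
print(mean_squared_error(targets, outlier))   # 400.0
```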

R Squared (R²)

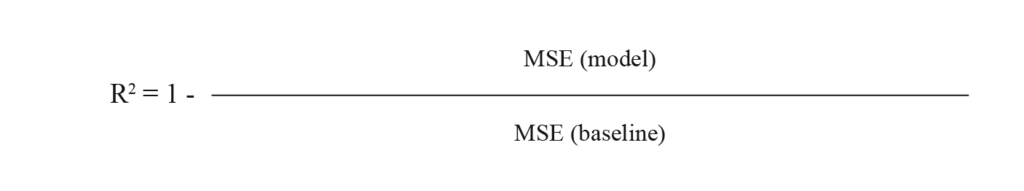

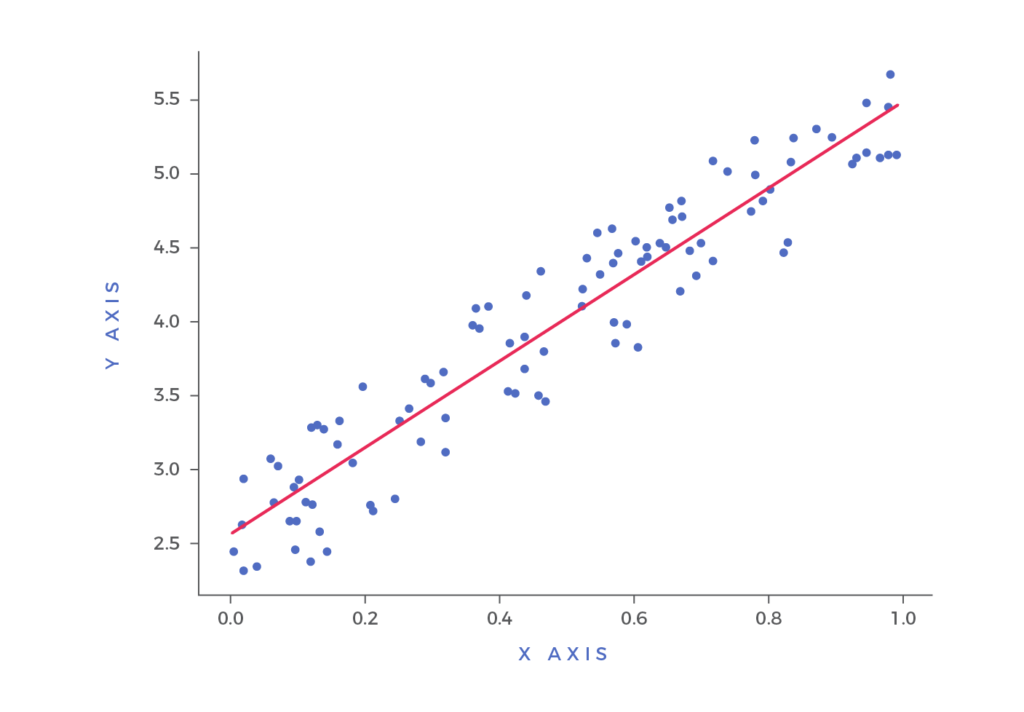

R² (sometimes referred to as “R-Two”), or the coefficient of determination, is another fit score we sometimes lean on when assessing a model. R² compares the quality of the model’s fit against a naive model that always predicts the mean, which essentially tells us how well a given model’s data points fit a function or line with curvature. If this sounds a little bit familiar, it’s because the coefficient of determination is closely related to MSE. A noteworthy difference here is that R² is scale-free, which means that it doesn’t matter whether the output values are very large or very small.

There’s a slight misconception that R² is confined to values between 0 and 1. Even though we typically only plot R² from 0 to 1, it has no lower bound and can approach minus infinity. This is important to note because, in the rare instance that R² is negative, we’ll know that the model’s performance is even worse than simply predicting the mean. Consider a poorly performing model that predicts large negative values even when the actual targets are positive. In this context, R² will be less than 0. While this circumstance is fairly unlikely, the possibility exists and should be noted as you leverage this fit statistic.
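A minimal sketch, using the standard definition of R² as one minus the ratio of the model’s squared error to the squared error of always predicting the mean. The data is invented to show a good fit, the mean baseline, and a negative score.

```python
def r_squared(targets, predictions):
    """1 - (residual sum of squares / total sum of squares around the mean)."""
    mean_target = sum(targets) / len(targets)
    ss_res = sum((t - p) ** 2 for t, p in zip(targets, predictions))
    ss_tot = sum((t - mean_target) ** 2 for t in targets)
    return 1 - ss_res / ss_tot


targets = [1, 2, 3, 4, 5]

good = r_squared(targets, [1.1, 1.9, 3.2, 3.8, 5.0])  # close fit
mean = r_squared(targets, [3, 3, 3, 3, 3])            # always predict the mean
bad  = r_squared(targets, [-5, -4, -3, -2, -1])       # negative predictions

print(round(good, 2))  # 0.99
print(mean)            # 0.0
print(bad)             # -17.0
```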

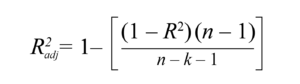

Adjusted R Squared (R²)

While R² articulates how a model’s points fit a function (the line you see above), adjusted R² indicates how well those data points fit a line while also adjusting for the number of variables in the model relative to the number of data points. The more poorly performing variables a model has, the lower the adjusted R² will be. Inversely, the more useful variables, the higher adjusted R² will be.

It’s important to note here that adjusted R² will always be less than or equal to R². The main difference between adjusted R² and R² is that adjusted R² will penalize added variables that do not improve the model. This is because, in a typical regression situation, there are often cases in which it might seem to make sense to add extra variables as they come up. However, in an ordinary least squares fit, R² never decreases when a variable is added, so even random variables can appear to improve the fit. Adjusted R² compensates for this by penalizing additional variables.
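The usual formula for adjusted R² takes R², the number of data points and the number of variables as inputs. The sketch below uses hypothetical values to show how a model with many extra variables can end up with a lower adjusted R² despite a slightly higher R².

```python
def adjusted_r_squared(r2, n_samples, n_predictors):
    """1 - (1 - R²) * (n - 1) / (n - p - 1)."""
    if n_samples - n_predictors - 1 <= 0:
        raise ValueError("need more data points than predictors plus one")
    return 1 - (1 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)


# Hypothetical models fit on the same 50 records.
lean   = adjusted_r_squared(0.80, n_samples=50, n_predictors=3)
bulky  = adjusted_r_squared(0.81, n_samples=50, n_predictors=10)

print(round(lean, 3))   # 0.787
print(round(bulky, 3))  # 0.761
```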